Enhancing State Synchronization in Asynchronous Distributed Systems via Timestamping Techniques

Introduction

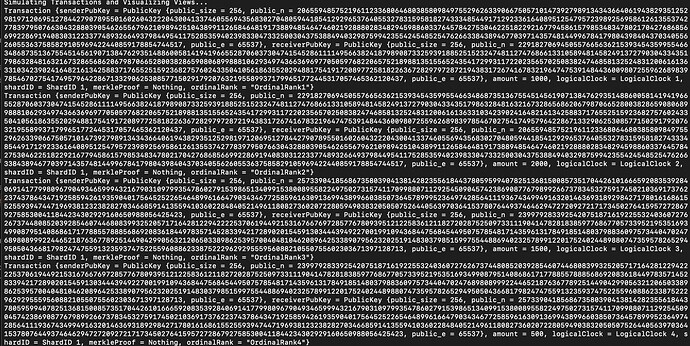

In distributed asynchronous systems, such as those embodied by the Sierpinski Merkle Trie (SMT) protocol, achieving efficient state synchronization among various shards poses significant challenges. This paper explores advanced timestamping techniques, specifically Lamport timestamps, dense timestamps, and vector clocks, to facilitate “as-is” snapshots for state synchronization. These mechanisms ensure consistency in the order of events across disparate shards, a critical aspect in maintaining the integrity of a distributed system.

Lamport Timestamps and Causal Relationships

Lamport timestamps provide a fundamental mechanism in distributed systems to establish causal relationships between events. They are based on a simple yet powerful principle: incrementing a counter (timestamp) for each event in the system. This section delves into the mathematical formalization of Lamport timestamps and provides an algorithm for updating them.

Mathematical Formalization

A Lamport timestamp, denoted as L, is an integer assigned to each event in a distributed system. For two events A and B, if A causally precedes B, then L(A) < L(B). This relationship is formalized as follows:

Theorem

If event A causally precedes event B in a distributed system, then the Lamport timestamp of A is less than that of B.

Proof

Assume two events A and B occur, where A causally affects B. By the definition of Lamport timestamps, when A occurs, its counter C_A is incremented. As B is causally affected by A, B's counter C_B is set to be at least C_A + 1. Therefore, L(A) = C_A < C_B = L(B).

Algorithm for Updating Lamport Timestamps

The algorithm to update Lamport timestamps upon the occurrence of an event or the receipt of a message is as follows:

L \leftarrow \text{Lamport Clock}

\text{EventOccurs()}

L \leftarrow L + 1

\text{ReceiveMessage}(M)

L \leftarrow \max(L, L(M)) + 1

Dense Timestamps for Chronological Ordering

Dense timestamps provide a high-resolution chronological order of events, facilitating precise snapshots of the state at any given time. These timestamps are especially valuable in systems where the granularity of temporal ordering is paramount.

Definition

A dense timestamp D is a real number that uniquely represents a moment in time with fine granularity. For two distinct events X and Y, D(X) \neq D(Y), ensuring unique temporal identifiers for each event.

Vector Clocks for State Synchronization

Vector clocks extend Lamport timestamps by enabling each shard in the system to maintain a summary of the information received from other shards. This summary aids in understanding the state of the system from the perspective of each shard.

Implementation

A vector clock V in a system of n shards is represented as an n-dimensional vector of integers. Each element V_i of the vector represents the latest timestamp of shard i.

V \leftarrow \text{Vector Clock}

\text{EventOccursAtShard}(i)

V_i \leftarrow V_i + 1

\text{ReceiveMessageFromShard}(j, V')

V \leftarrow \max(V, V')

Snapshot for State Synchronization

Each shard utilizes timestamping mechanisms to create a comprehensive snapshot of its state. This snapshot includes the causal and chronological history, enabling efficient synchronization.

Snapshot Generation Algorithm

The algorithm to generate a snapshot of a shard’s state is outlined as follows:

S \leftarrow \text{State of Shard}

\text{GenerateSnapshot()}

Snapshot \leftarrow \{S, V, D\}

\text{Return Snapshot}

Efficient Synchronization

The proposed method significantly reduces synchronization overhead by obviating the need to process all events sequentially. Instead, it relies on understanding the causal and chronological summary encapsulated in the snapshots.

Summary

The integration of Lamport timestamps, dense timestamps, and vector clocks offers a robust solution for efficient and accurate state synchronization in distributed systems, particularly those based on the SMT protocol. This methodology enables the creation of detailed snapshots of each shard’s state, capturing the complete sequence of events and allowing for “as-is” synchronization, a crucial feature for the consistency and efficiency of distributed asynchronous systems.