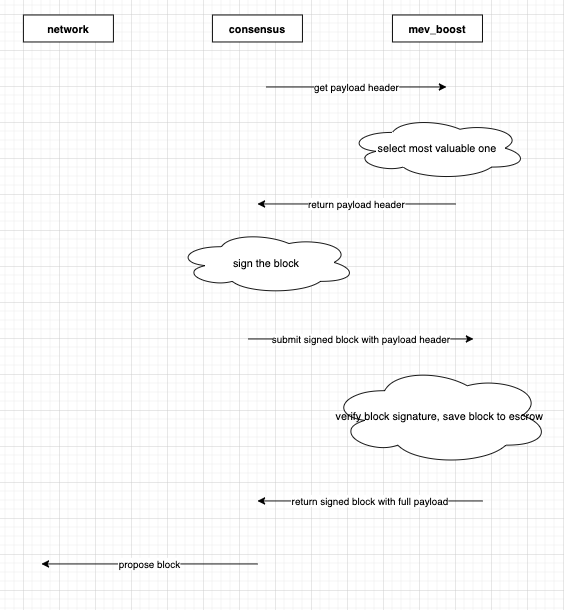

Had a fruitful conversation with @thegostep where we explored another scheme in which consensus (the validator) is the final actor that submits the block to the network.

In this design:

1.) consensus requests ExecutionPayloadHeader from mev_boost

2.) mev_boost returns the most profitable ExecutionPayloadHeader to consensus

3.) consensus signs a SignedBeaconBlock that contains the ExecutionPayloadHeader and submits it to mev_boost

4.) mev_boost validates the block signature and saves the block to escrow so that slashing can be enforced in the event of frontrunning

5.) mev_boost returns the SignedBeaconBlock with the full ExecutionPayload to consensus

6.) consensus validates the SignedBeaconBlock again, saves it in the DB, and submits it to the network
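The six steps above can be sketched roughly as follows. This is only a toy model under stated assumptions: the class and function names (`MevBoost`, `get_header`, `submit_signed_block`, `propose`), the `value_wei` field, and the in-memory `escrow` and `db` dicts are all hypothetical, and an HMAC with a shared key stands in for the real BLS signature so the example stays self-contained.

```python
import hashlib
import hmac
from dataclasses import dataclass, replace
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ExecutionPayload:
    block_hash: str
    transactions: tuple
    value_wei: int  # hypothetical field: what the proposer would earn


@dataclass(frozen=True)
class ExecutionPayloadHeader:
    block_hash: str
    value_wei: int


@dataclass(frozen=True)
class SignedBeaconBlock:
    slot: int
    header: ExecutionPayloadHeader
    signature: str
    payload: Optional[ExecutionPayload] = None  # filled in at step 5


def sign(key: bytes, slot: int, header: ExecutionPayloadHeader) -> str:
    # Toy stand-in for a BLS signature (assumption: shared HMAC key).
    msg = f"{slot}:{header.block_hash}:{header.value_wei}".encode()
    return hmac.new(key, msg, hashlib.sha256).hexdigest()


def verify(key: bytes, block: SignedBeaconBlock) -> bool:
    expected = sign(key, block.slot, block.header)
    return hmac.compare_digest(expected, block.signature)


class MevBoost:
    def __init__(self, payloads: List[ExecutionPayload], key: bytes) -> None:
        self.payloads = {p.block_hash: p for p in payloads}
        self.key = key
        self.escrow: Dict[int, SignedBeaconBlock] = {}

    def get_header(self) -> ExecutionPayloadHeader:
        # Step 2: return the header of the most profitable payload.
        best = max(self.payloads.values(), key=lambda p: p.value_wei)
        return ExecutionPayloadHeader(best.block_hash, best.value_wei)

    def submit_signed_block(self, block: SignedBeaconBlock) -> SignedBeaconBlock:
        # Step 4: validate the signature and keep the header-only block in escrow.
        if not verify(self.key, block):
            raise ValueError("invalid block signature")
        self.escrow[block.slot] = block
        # Step 5: hand back the same signed block with the full payload attached.
        return replace(block, payload=self.payloads[block.header.block_hash])


def propose(slot: int, boost: MevBoost, key: bytes,
            db: Dict[int, SignedBeaconBlock]) -> SignedBeaconBlock:
    header = boost.get_header()                                     # steps 1-2
    signed = SignedBeaconBlock(slot, header, sign(key, slot, header))  # step 3
    full = boost.submit_signed_block(signed)                        # steps 4-5
    # Step 6: validate again before saving and broadcasting.
    if not verify(key, full) or full.payload is None:
        raise ValueError("mev_boost returned an invalid block")
    if full.payload.block_hash != header.block_hash:
        raise ValueError("payload does not match the signed header")
    db[slot] = full  # a real node would now gossip the block to the network
    return full
```

The point of the sketch is the ordering: consensus only ever signs over the header, mev_boost holds the signed header before revealing the payload, and the final validate/save/broadcast step stays on the consensus side.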

Pros

1.) mev_boost and the relay network no longer have to touch the consensus layer’s network stack. Easier to reason about and simpler to implement

2.) beacon node / validator is guaranteed to have the full version of SignedBeaconBlock (i.e. ExecutionPayload, not ExecutionPayloadHeader) to save in storage, and does not depend on the network to gossip back the previously signed block.

Note: the same could be achieved in the current scheme by having mev_boost return the full block as it submits it to the network

3.) consensus gets to submit a backup block in the event mev_boost fails to respond with the signed block containing the full payload.

Note: this is dangerous and requires more consideration. If mev_boost fails to respond but still holds the valid SignedBeaconBlock with ExecutionPayloadHeader, the validator could be subject to slashing for having signed two valid blocks for the same slot
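To make the hazard in the last note concrete, here is a minimal sketch of the condition it describes and of one conservative local guard. The names `SignedHeader`, `is_double_proposal`, and `SigningGuard` are hypothetical, and the guard is only one possible policy (refuse to sign a second, different block for a slot), not something settled in the conversation.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass(frozen=True)
class SignedHeader:
    slot: int
    proposer_index: int
    block_root: str


def is_double_proposal(a: SignedHeader, b: SignedHeader) -> bool:
    # Two different signed blocks from the same proposer for the same slot.
    return (
        a.slot == b.slot
        and a.proposer_index == b.proposer_index
        and a.block_root != b.block_root
    )


@dataclass
class SigningGuard:
    # Hypothetical local record: slot -> block root already signed.
    signed_roots: Dict[int, str] = field(default_factory=dict)

    def may_sign(self, slot: int, block_root: str) -> bool:
        previous = self.signed_roots.get(slot)
        if previous is not None and previous != block_root:
            # A backup block here would conflict with what mev_boost holds.
            return False
        self.signed_roots[slot] = block_root
        return True
```

Under this policy, once consensus has signed the header-based block for a slot, a locally built backup block for that slot is refused, which trades the missed proposal for not handing mev_boost a conflicting signed block.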

Cons

1.) the validator’s identity becomes known to mev_boost; the more validators connect to the same mev_boost software, the greater the risk

This captures most of the notes. I will do more thinking around this scheme and evaluate further trade-offs.