There exist chunking strategies that take a contract's control flow into account. They are hypothesized to produce leaner proofs, but are also more complex. Until we have actual data from these approaches, we can estimate the savings that a hypothetically optimal chunking strategy would yield compared to, e.g., the jumpdest-based approach.

To estimate this we can measure chunk utilization, which tells us how much of the code sent in the chunks was actually necessary for executing a given block. For example, if for a tx we send one chunk of contract A and only the first half of the chunk is needed (say there’s a STOP in the middle), then chunk utilization is 50%; the other half is useless code that was transmitted only due to the overhead of the chunker.
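As a rough illustration of the metric, here is a minimal sketch of how chunk utilization could be computed. The `Chunk` type, the `chunk_utilization` function and the idea of collecting a set of touched byte offsets from an execution trace are assumptions made for this example, not the tooling used for the measurement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    """A contiguous slice of contract code (hypothetical representation)."""
    start: int   # byte offset of the chunk's first byte in the contract code
    length: int  # chunk size in bytes


def chunk_utilization(chunks, touched_offsets):
    """Fraction of transmitted code bytes that execution actually needed.

    `touched_offsets` is assumed to be the set of code byte offsets read
    during execution (opcodes plus their immediate push data).
    """
    sent = sum(c.length for c in chunks)
    if sent == 0:
        return 0.0
    used = sum(
        1
        for c in chunks
        for offset in range(c.start, c.start + c.length)
        if offset in touched_offsets
    )
    return used / sent


# The example from the text: one 32-byte chunk, execution halts at a STOP
# located at byte 15, so only bytes 0..15 are needed.
chunks = [Chunk(start=0, length=32)]
touched = set(range(16))
print(chunk_utilization(chunks, touched))  # 0.5
```

For a whole block, the same ratio would be taken over all chunks sent for all contracts touched in that block.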

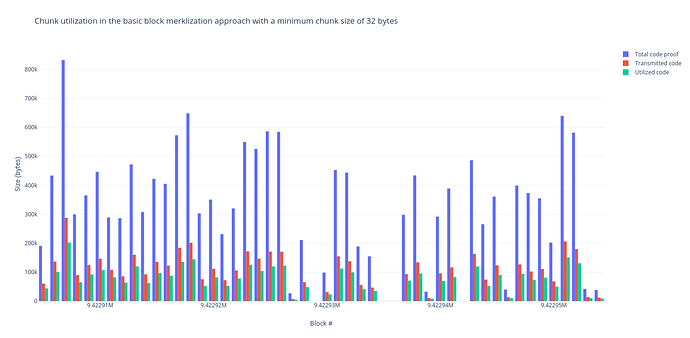

Above you can see that average chunk utilization for 50 mainnet blocks is roughly 70% when using the jumpdest-based approach with a minimum chunk size of 32 bytes. That means an optimal chunking strategy could reduce the code transmitted by about 30%, but code is only one part of the proof (which also includes hashes, keys and encoding overhead). Assuming binary tries cut the hash part by 3x, there might be a ~11-15% improvement in total proof size compared to the jumpdest-based approach.
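The arithmetic behind this estimate can be sketched as follows. The share of the proof taken up by code is not stated above, so `code_share` is a hypothetical parameter here. Back-solving from the quoted 11-15% range suggests code would make up very roughly 37-50% of the proof after the switch to binary tries, but that is an inference from the numbers, not a measured figure.

```python
def total_proof_saving(utilization, code_share):
    """Upper bound on total proof size reduction from optimal chunking.

    utilization: fraction of transmitted code that was needed (e.g. 0.7)
    code_share:  assumed fraction of the proof made up of code bytes
                 (hypothetical input, not given in the text)
    """
    return (1.0 - utilization) * code_share


def implied_code_share(total_saving, utilization):
    """Code share that a given total saving would imply at this utilization."""
    return total_saving / (1.0 - utilization)


utilization = 0.7  # average over the 50 measured blocks

for saving in (0.11, 0.15):
    share = implied_code_share(saving, utilization)
    print(f"total saving {saving:.0%} -> implied code share {share:.0%}")
```

The relation is linear, so any change in the assumed code share (for instance from a different hash or encoding overhead) scales the total saving proportionally.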