Summary & TL;DR

The ProbeLab team (https://probelab.io) is carrying out a study on the performance of Gossipsub in Ethereum’s P2P network. This post reports the first of a list of metrics that the team will be diving into, namely, how efficient Gossipsub’s gossip mechanism is. For the purposes of this study, we have built a tool called Hermes (probe-lab/hermes on GitHub), which acts as a Gossipsub listener and tracer. Hermes subscribes to all relevant pubsub topics and traces all protocol interactions. The results reported here are from a 3.5hr trace.

Study Description: The purpose of this study is to identify the ratio between the number of IHAVE messages sent and the number of IWANT messages received by our node. We measure this both in terms of overall messages and in terms of msgIDs. This metric gives us an overview of the effectiveness of Gossipsub’s gossip mechanism, i.e., how useful the bandwidth consumed by gossip messages really is.
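As a rough illustration of how this metric can be computed from a trace, here is a minimal Python sketch. The `ControlEvent` record and its fields are hypothetical and are not Hermes’ actual trace format; we also assume that each event has already been attributed to a topic.

```python
from dataclasses import dataclass, field


@dataclass
class ControlEvent:
    """Hypothetical trace record; not the actual Hermes schema."""
    kind: str        # "IHAVE" or "IWANT"
    direction: str   # "sent" or "received", from our node's point of view
    topic: str       # assumed to be attributed already
    msg_ids: list = field(default_factory=list)


def gossip_ratios(events):
    """Per topic, count sent IHAVEs vs received IWANTs (messages and msgIDs)."""
    stats = {}
    for ev in events:
        s = stats.setdefault(ev.topic, {
            "ihave_msgs": 0, "ihave_ids": 0,
            "iwant_msgs": 0, "iwant_ids": 0,
        })
        if ev.kind == "IHAVE" and ev.direction == "sent":
            s["ihave_msgs"] += 1
            s["ihave_ids"] += len(ev.msg_ids)
        elif ev.kind == "IWANT" and ev.direction == "received":
            s["iwant_msgs"] += 1
            s["iwant_ids"] += len(ev.msg_ids)
    for s in stats.values():
        # Ratio = sent IHAVE per received IWANT; None if no IWANT was received.
        s["msg_ratio"] = s["ihave_msgs"] / s["iwant_msgs"] if s["iwant_msgs"] else None
        s["id_ratio"] = s["ihave_ids"] / s["iwant_ids"] if s["iwant_ids"] else None
    return stats
```

With this sketch, a topic where we sent 100 msgIDs in IHAVEs and got a single msgID back in an IWANT has an `id_ratio` of 100, which corresponds to what this report writes as 1:100.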

TL;DR: Gossipsub’s gossip mechanism, i.e., the IHAVE and IWANT message exchange, is not efficient in the Ethereum network. The ratio between sent IHAVEs and received IWANTs can exceed 1:50 for some topics. Suggested optimisations and things to investigate to improve effectiveness are given at the end of this report.

Overall Results - Sent IHAVEs vs Received IWANT

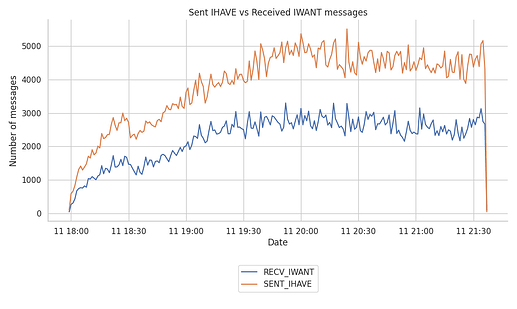

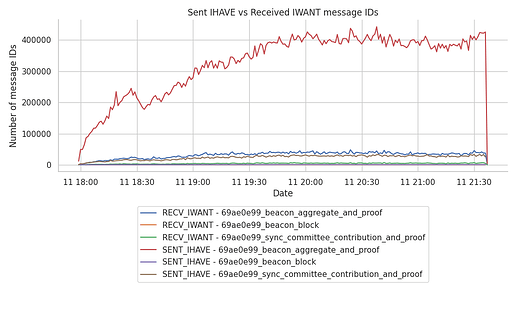

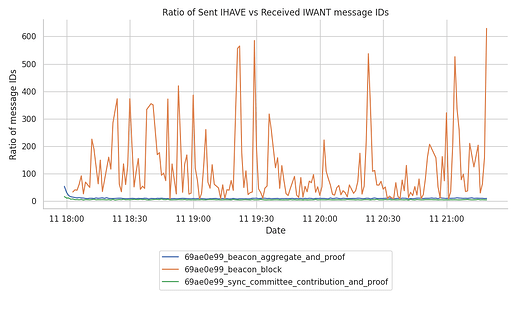

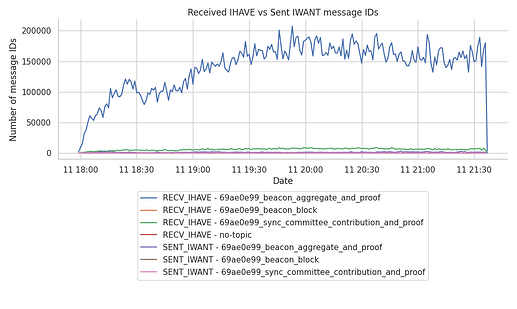

The plots below do not differentiate between topics; they present aggregates over all topics. The ratio of sent IHAVEs vs received IWANTs does not seem extreme (top plot), at less than 1:2, but digging deeper into the number of msgIDs carried by those IHAVE and IWANT messages shows a different picture (middle plot). The ratios for all three topics are given in the third (bottom) plot, where we see that, especially for the beacon_block topic, the ratio is close to 1:100 and goes a lot higher at times.

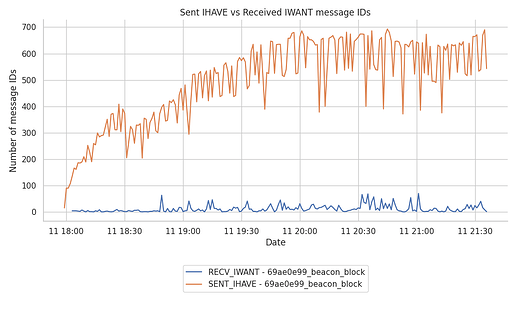

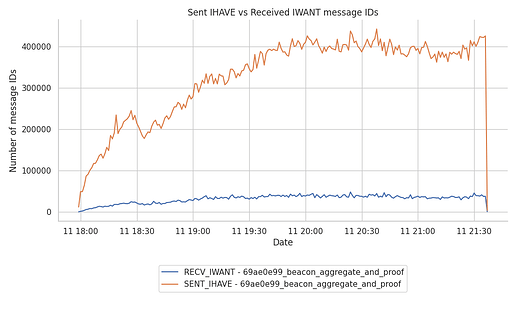

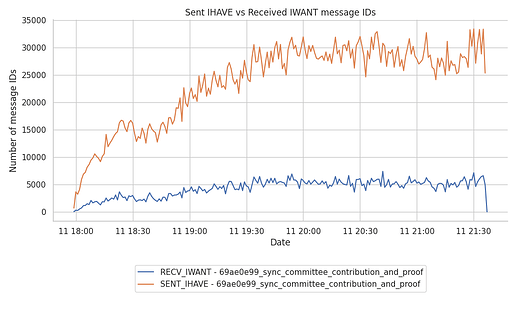

Per Topic Results - Sent IHAVEs vs Received IWANT

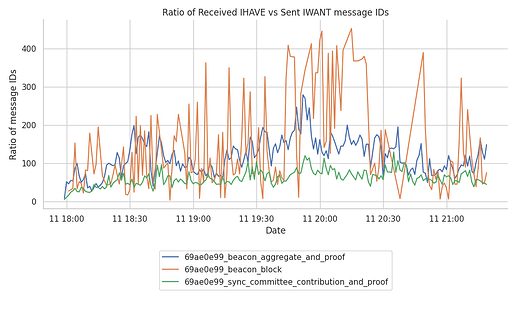

Next, we’re diving into the ratio per topic to get a better understanding of the gossip effectiveness for each topic. We’re presenting the overall number as well as the ratio per topic. The ratio of sent IHAVEs vs received IWANTs is more extreme and reaches an average of close to 1:100 for the beacon_block topic, 1:10 for the beacon_aggregate_and_proof topic and 1:6 for the sync_committee_contribution_and_proof topic.

It is clear that there is an excess of IHAVE messages sent compared to the usefulness that these provide in terms of received IWANT messages. At least 10x of the bandwidth consumed by gossip could be saved if we reduced the ratios, especially for the beacon_block and beacon_aggregate_and_proof topics.

The beacon_aggregate_and_proof topic sends hundreds of thousands of msgIDs over the wire in a minute, with very few IWANT messages in return. The number of sent IHAVE msgIDs stays around 10 times higher than the number of received IWANT msgIDs.

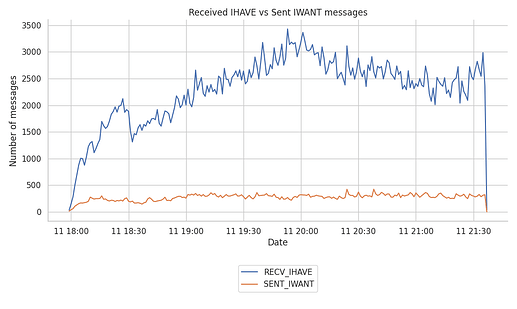

Overall Results - Received IHAVE vs Sent IWANT

In terms of overhead, the situation is even more extreme for Received IHAVE vs Sent IWANT messages. We include below only the overall results, as well as the ratios per topic. We believe that the ratios are even higher here because our node is rather well-connected (it keeps connections to 250 peers) and is therefore more likely to be included in the GossipFactor fraction of peers that are chosen to send gossip to (i.e., IHAVEs). This in turn means that we must be receiving lots of duplicate msgIDs in those IHAVE messages. The number of duplicate messages is the subject of a different metric further down in this report.

Anomalies

Gossipsub messages should always be assigned to a particular topic, as not all peers are subscribed to all topics. Having a topic helps with correctly identifying invalid messages and avoiding overloading of peers with messages they’re not interested in.

We have consistently seen throughout the duration of the experiment both IHAVE and IWANT messages sent to our node with an empty topic. Both of these are considered anomalies, especially given that the IWANT messages we received were for msgIDs that we didn’t advertise through an IHAVE message earlier.
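The two checks described above can be sketched as follows. This is only an illustration: the tuple layout of `received` and the `advertised_ids` set are assumptions made for the example, not the format that Hermes records.

```python
def find_anomalies(received, advertised_ids):
    """Flag received control messages that look anomalous.

    received:       iterable of (kind, topic, msg_ids) tuples (hypothetical layout)
    advertised_ids: set of msgIDs that we previously sent in IHAVE messages
    """
    empty_topic = []    # IHAVE/IWANT messages that carry no topic
    unadvertised = []   # IWANTs asking for msgIDs we never advertised
    for kind, topic, msg_ids in received:
        if not topic:
            empty_topic.append((kind, msg_ids))
        if kind == "IWANT":
            unknown = [m for m in msg_ids if m not in advertised_ids]
            if unknown:
                unadvertised.append((topic, unknown))
    return empty_topic, unadvertised
```

A message can appear in both lists, which matches the case reported here: IWANTs with an empty topic that request msgIDs we did not advertise.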

Digging deeper into the results, we have seen that 49 out of the 55 peers from which we received messages with an empty topic were Teku nodes. We opened the following GitHub issue to surface the anomaly: "Possible Bug on GossipSub implementation that makes sharing `IHAVE` control messages with empty topics" (Issue #361, libp2p/jvm-libp2p). It has since been fixed in "Set topicID on outbound IHAVE and ignore inbound IHAVE for unknown topic" by StefanBratanov (Pull Request #365, libp2p/jvm-libp2p).

Takeaways

- The average effectiveness ratio of the gossip functionality is higher than 1:10 across topics, which is not ideal.

- Messages that are generated less frequently (such as beacon_block topic messages) are primarily propagated through the mesh and less through gossip (IHAVE/IWANT messages), hence the higher ratios, which reach up to 1:100 for this particular topic.

- GossipSub control messages are relevant, but we identify two different use-cases for GossipSub that don’t benefit in the same way from all these control messages:

  - Big but less frequent messages → more prone to DUPLICATED messages, but with less overhead on the IHAVE control side. The gossiping effectiveness is rather small here.

  - Small but very frequent messages → add significant overhead on the bandwidth usage as many more msg_ids are added in each IHAVE message.

Optimisation Potential

Clearly, having an effectiveness ratio of 1:10 or even less, i.e., consuming >10x more bandwidth for IHAVE/IWANT messages than actually needed, is not ideal. Three directions for improvement have been identified, although none of them has been implemented, tested, or simulated.

- Bloom filters: instead of sending msgIDs in IHAVE/IWANT messages, peers can send a bloom filter of the messages that they have received within the “message window history”.

- Adjust GossipsubHistoryGossip factor from 3 to 2: This requires some more testing, but it’s a straightforward item to consider. This parameter, set to 3 by default [link], defines for how many heartbeats we keep sending IHAVE messages. Advertising messages from 3 heartbeats ago increases the number of IHAVE messages with questionable return (i.e., how many IWANT messages we receive in return).

- Adaptive GossipFactor per topic: As per the original go implementation of Gossipsub [link], the GossipFactor affects how many peers we will emit gossip to at each heartbeat. The protocol sends gossip to GossipFactor * (total number of non-mesh peers). Making this a parameter that adapts to the ratio of Sent IHAVE vs Received IWANT messages per topic can greatly reduce the overhead seen.

  - Nodes sharing lots of IHAVE messages with very few IWANT messages in return could reduce the factor (saving bandwidth).

  - Nodes receiving a significant amount of IWANT messages through gossip could actually increase the GossipFactor accordingly to help out the rest of the network.

  - There are further adjustments that can be made if a node detects that a big part of its messages come from IWANT messages that it sends. These could revolve around increasing the mesh size D, or rotating the peers it has in its mesh.
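To make the adaptive GossipFactor idea more concrete, here is a minimal Python sketch. It has not been implemented, tested, or simulated in any client. The gossip target calculation follows our understanding of the go implementation, where a lower bound (D_lazy, assumed to be 6 here) also applies. The adaptation rule, its thresholds, step size, and bounds are purely hypothetical values chosen for illustration.

```python
def gossip_targets(non_mesh_peers: int, gossip_factor: float, d_lazy: int = 6) -> int:
    """Number of non-mesh peers to send IHAVEs to at a heartbeat.

    Assumption: at least d_lazy peers are chosen, capped by the number of
    available non-mesh peers.
    """
    target = max(d_lazy, int(gossip_factor * non_mesh_peers))
    return min(target, non_mesh_peers)


def adapt_gossip_factor(current: float,
                        sent_ihave_ids: int,
                        received_iwant_ids: int,
                        low: float = 0.02,        # hypothetical: below this, gossip is mostly wasted
                        high: float = 0.20,       # hypothetical: above this, gossip is clearly useful
                        step: float = 0.05,       # hypothetical adjustment per evaluation window
                        min_factor: float = 0.05,
                        max_factor: float = 0.50) -> float:
    """Return a new per-topic GossipFactor based on how useful our IHAVEs were."""
    if sent_ihave_ids == 0:
        return current  # nothing advertised in this window, nothing to learn
    usefulness = received_iwant_ids / sent_ihave_ids
    if usefulness < low:
        return max(min_factor, current - step)   # few IWANTs back: gossip to fewer peers
    if usefulness > high:
        return min(max_factor, current + step)   # many IWANTs back: help the network more
    return current
```

For example, with 100 non-mesh peers and a factor of 0.25, `gossip_targets` selects 25 peers; a topic with a 1:100 ratio (usefulness 0.01) would step the factor down towards `min_factor` on every evaluation window.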

For more details and results on Ethereum’s network head over to https://probelab.io.