Many thanks to Vitalik (EF), Justin (EF), Kelvin (OP) and Gabriel (Cartesi) for feedback and discussion.

The goal of this post is to bootstrap a discussion around a socially agreeable challenge period lower bound for optimistic rollups and, by consequence, around Ethereum’s strong censorship resistance guarantees.

Today, optimistic rollups account for 91.9% of the total value secured by all rollups. We (L2BEAT) recently started to enforce a ≥7d challenge period requirement for projects to be classified as Stage 1, which has sparked a debate on where this number comes from and how we can assess whether it is appropriate. We feel it’s time to better formalize this value and either update it or ratify it as the community standard, as some projects have already started to argue that shorter challenge periods might be equally safe.

Why do we have the 7d challenge period in the first place?

The biggest misconception around challenge periods is the belief that they are set based on the time it takes to perform the interaction between two parties in a multi-round challenge. If the number of interactions is reduced, at the extreme to 1 with non-interactive protocols (example), it is sometimes suggested that the challenge period can be significantly reduced too.

The reality is that the challenge period was originally set to allow a social response in case of a 51% consensus attack and to prevent funds from being stolen. It’s important to note that, on L1, a strong censorship attack cannot cause funds sitting in a simple account to be stolen.

As far as we know, the details of such a social response have never been precisely discussed.

Background: how optimistic rollups keep track of time

There are two main ways optimistic rollups keep track of the time left to participate in a challenge: either with a global timer, or with a chess clock model. The first type is the simplest form and it is mainly employed by single-round challenge protocols.

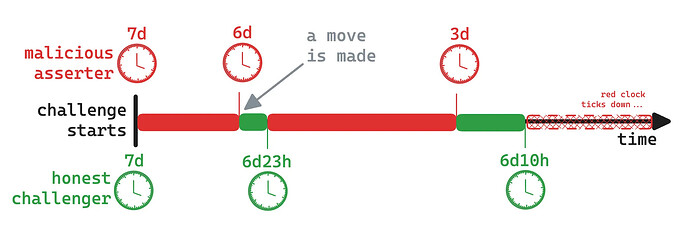

The second type is used by multi-round protocols such as OPFP, BoLD or Dave, to prevent the attacker from wasting the honest player’s available time by not acting when it’s their turn. In practice, two clocks are created for each challenge, one for the asserter and one for the challenger. A clock is consumed only while it is its owner’s turn to make a move, and it is paused while it is the other player’s turn. If a clock runs out of time, the other player wins.

Funds can be compromised when honest players cannot perform their moves due to a strong censorship attack, causing their clocks to expire.
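To make the failure mode concrete, here is a minimal sketch of the chess clock model. The `ChessClock` class, the `simulate` helper, the 0.1h of honest work per move, and the choice of the challenger as the honest, censored party are all assumptions made for illustration; they are not taken from any specific protocol.

```python
class ChessClock:
    """Toy chess clock: only the player whose turn it is burns time."""

    def __init__(self, budget_hours):
        self.remaining = {"asserter": budget_hours, "challenger": budget_hours}
        self.turn = "challenger"  # assumption: the challenger moves first
        self.winner = None

    def move(self, elapsed_hours):
        """The current player lands a move after `elapsed_hours`."""
        player = self.turn
        other = "asserter" if player == "challenger" else "challenger"
        self.remaining[player] -= elapsed_hours
        if self.remaining[player] <= 0:
            # The clock expired before the move landed: the other player wins.
            self.winner = other
        else:
            self.turn = other


def simulate(censorship_delay_hours, moves_per_player=35, clock_days=7):
    """Honest challenger vs. censoring asserter; returns the winner, if any."""
    clock = ChessClock(clock_days * 24)
    for _ in range(moves_per_player):
        clock.move(0.1 + censorship_delay_hours)  # honest move, possibly delayed
        if clock.winner:
            return clock.winner
        clock.move(0.1)  # attacker move, never censored
        if clock.winner:
            return clock.winner
    return None  # the game ran to the end without a timeout


print(simulate(0.0))  # None: no censorship, nobody times out
print(simulate(4.8))  # asserter: the honest clock expires on its last move
```

Without censorship the honest player spends only a few hours of its clock; with every honest move delayed by a few hours, the same clock runs out before the game ends.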

In this post we’ll focus on the chess clock model, as it represents most projects and because the global timer model case can be trivially deduced from it.

Tentative social response in case of strong censorship attacks

For simplicity, let’s assume that censorship is sustained, i.e. it is performed with no breaks. Moreover, we assume that L1 rollbacks and rollup-specific invalid state transitions on L1 are a highly controversial and undesirable social response that should be avoided where possible.

We sketch the following timeline:

- (0h → 24h): a 51% strong censorship attack is detected.

- (24h → 6d): a hard fork is coordinated, implemented and activated to slash censoring validators.

- (6d → 7d): the 1d left on the honest players’ clock to play the game.

This social response successfully prevents a “51%” attack on optimistic rollups with no rollbacks only if all of the following hold true: (1) we are able to detect censorship within 24h; (2) we are able to coordinate, implement and activate a hard fork within 5d; (3) challenge protocols are efficient enough such that they can be played by consuming less than 1d when no censorship is present.

There is a caveat though: the above is true if we take the “51%” value literally, while it fails if the attack is repeated even after the hard fork (…76% attack?). A simple solution is to allow contracts to query the timestamp of the latest hard fork and extend clocks if necessary, but it requires a protocol upgrade.

In practice, an attacker can just delay the honest party’s transactions such that the clock expires before the last move is made. Assuming ~70 steps (as in Arbitrum’s BoLD) needed to reach the final step, where 35 would be executed by the honest parties, and clocks of 7 days, the attacker can delay each move by ~5 hours every ~5 hours, consuming both its own clock and the honest party’s clock. For the above timeline to hold, we should be able to detect such censorship attacks within 1 day too.
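The arithmetic behind the timeline and the ~5 hour figure can be sketched as follows. The function names are hypothetical, and the second function assumes that the honest clock drains during the whole detection and hard-fork window.

```python
HOURS_PER_DAY = 24


def delay_per_honest_move_hours(clock_days, honest_moves):
    """Average delay per honest move that adds up to the entire clock."""
    return clock_days * HOURS_PER_DAY / honest_moves


def time_left_after_fork_days(clock_days, detection_days, fork_days):
    """Clock time left to the honest players once the hard fork is active,
    assuming the clock drains for the whole detection and fork window."""
    return clock_days - detection_days - fork_days


print(delay_per_honest_move_hours(7, 35))  # 4.8
print(time_left_after_fork_days(7, 1, 5))  # 1
```

With a 7d clock and 35 honest moves, delaying each move by about 4.8 hours is enough to exhaust the clock, so a detector has to flag censorship bursts of only a few hours. If detection or the hard fork takes longer than assumed, the time left to play shrinks below the 1d budget.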

The alternative: socially accept that (endgame) optimistic rollups are less secure than (endgame) ZK rollups

Today, Ethereum provides around $100B of economic security, which is around 2x the total value secured by all L2s settling on Ethereum. We might accept strong censorship attacks to be extremely unlikely and abandon the idea that optimistic rollups should remain safe around them. In this case, the challenge period only needs to be long enough to protect from soft censorship attacks (i.e. builder-driven) and provide enough time to compute the necessary moves.

Let’s say we want optimistic rollups to be safe against soft censorship attacks of up to 99% while targeting a 99.99% inclusion probability: we would then need to provide at least 3h for each tx to be included on L1 and, given the ~35 steps needed per player to complete an interactive challenge, a challenge period of at least ~4.5d. The activation of FOCIL would improve inclusion guarantees significantly and could reduce the period, under this threat model, to a safe value of just a couple of days.
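One way to reproduce these numbers is sketched below. It assumes 12-second slots and that each slot is independently built by a censoring builder with probability 0.99; both the model and the function names are assumptions for illustration, not something specified above.

```python
import math

SLOT_SECONDS = 12  # assumed L1 slot time


def slots_needed(censoring_share, inclusion_prob):
    """Smallest n such that censoring_share**n <= 1 - inclusion_prob."""
    return math.ceil(math.log(1 - inclusion_prob) / math.log(censoring_share))


def challenge_period_days(censoring_share, inclusion_prob, moves_per_player):
    """Clock needed if every move gets the full per-transaction inclusion window."""
    per_move_seconds = slots_needed(censoring_share, inclusion_prob) * SLOT_SECONDS
    return moves_per_player * per_move_seconds / 86_400


slots = slots_needed(0.99, 0.9999)
print(slots, slots * SLOT_SECONDS / 3600)        # slots and hours per transaction
print(challenge_period_days(0.99, 0.9999, 35))   # days for 35 moves
```

Under these assumptions each transaction needs 917 slots, a little over 3 hours, and 35 moves need roughly 4.5 days, which is in line with the figures quoted above.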

Addendum: resources on strong censorship detection

Vitalik Buterin (2017): suspicion scores

Calculate the longest amount of time that the client has seen that a vote could have been included in the chain but was not included, with a “forgiveness factor” where old events are discounted by how old they are divided by 16.

It also proposes some modifications to Casper FFG to extend epochs when they are not finalized.

Vitalik Buterin (2019): responding to 51% attacks in Casper FFG

Discusses attester censorship and automated soft forks in response.

Vitalik Buterin (2020): timeliness detectors

The explicit goal is to detect 51% attacks, identify the “correct” chain and which validators to “blame”. The mechanism tries to identify blocks that arrived unusually late, with a commonly agreed synchrony bound. It involves introducing the concept of a timeliness committee to achieve agreement.

Sreeram Kannan (2023): Revere, an observability gadget for attester censorship in Ethereum

It’s much easier to recover from proposer censorship, because even a small fraction of honest proposers can provide censorship resistance. It is much harder to protect against attester censorship. Solution: increase the observability of uncle blocks. How? It is not easy to tell whether a block became an uncle because of censorship or because of network conditions. Add a rule: a proposer has to include transactions from uncle blocks. Use light clients that enhance observability for everyone by tracking headers.

Ed Felten (2023): onchain censorship oracle

Onchain censorship detection. The test says that either there has not been significant censorship, or maybe there has been censorship. Idea: measure onchain the number of empty (consensus) slots. With a confidence level of p=10^-6, and assuming 10% honest proposers, you can report censorship if there are more than 34 empty slots out of 688. Issue: the adversary can wait to launch the attack until it knows that the next 64 proposers will all be malicious. Mitigation: add 64 slots to the length of the test. Another issue: if the test fails, you might want to do it again over the following n blocks, but that’s unsound (p-hacking). Mitigation: if we want repetition, then each test needs to have more confidence. Abandoned in 2024 because of some attack vector.
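As a rough sanity check of the 34-out-of-688 threshold, the sketch below computes a binomial tail. It rests on one reading of the test that is an assumption here: under a sustained attack, slots assigned to honest proposers show up as empty, and each slot belongs to an honest proposer independently with probability 0.1. The function name is hypothetical.

```python
from math import comb


def prob_at_most_empty(k, n, honest_share):
    """P(at most k of n slots are empty) if each slot is empty
    independently with probability `honest_share`."""
    return sum(
        comb(n, i) * honest_share**i * (1 - honest_share) ** (n - i)
        for i in range(k + 1)
    )


# Chance of seeing 34 or fewer empty slots in 688 during a sustained attack
print(prob_at_most_empty(34, 688, 0.1))
```

Under this model, a sustained attack would leave about 69 empty slots on average, so observing 34 or fewer is very unlikely, with a probability around the 10^-6 level quoted above. That is why a low count lets the test conclude that there has been no significant censorship, while a higher count only means that there might have been.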