which depends on some combination of their personal preferences and network effect. Collective welfare = sum of everyone’s utility.

I remember discussing the article with you when you first posted it (indeed iirc, it was the impetus for me to create the ecoinomia cryptotwitter account, so I should thank you belatedly for kicking it off), and back then we discussed local vs global effects, so let me focus on this aspect of the model.

Back when I wrote my dissertation, I briefly used the term “endogenous preferences” to describe this “some combination of personal preferences and network effect”, defining endogenous preferences informally as “If you go to a movie theater, your ultimate choice of which movie to see depends in part on whether you go by yourself, with your buddies, your spouse, or your parents.”

This terminology was voted down very quickly (for spurious reasons), so I stopped using it. But it has another, very serious flaw: It doesn’t reflect endogenous preference, it reflects endogenous choice.

And the big problem economics has with network effects that I had to contend with is that under non-trivial demand-side interaction effects the all-encompassing Axiom of Revealed Preference breaks down. You can no longer impute from an observed choice (a fraction of) the underlying preference ordering: How can an outsider tell from watching you go to a movie with your parents whether you picked your preferred movie, or whether you just tagged along with your parents to make them happy? There is an underlying, typically unobservable and often tacit, negotiation process between involved parties to come to a (pooling or separating) equilibrium choice.

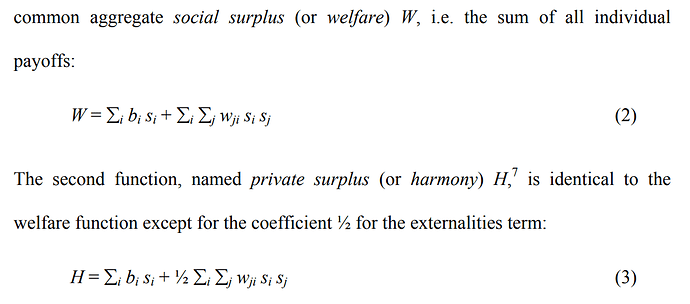

It’s an understandable modeling simplification to treat preference and (what I ended up calling) influence factors as similar, so that you can simply add them up into a personal utility function. I did exactly that. But it’s problematic to extract welfare implications from just aggregating those individual utility functions (and yes, it’s still widely done in economics), because doing so means you reinstate the Axiom of Revealed Preference in a situation where it doesn’t work.

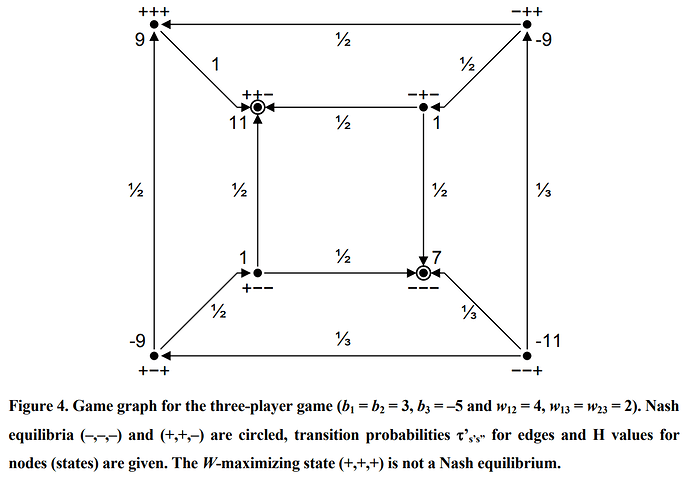

For me the intellectual breakthrough came when I read North & Thomas’s Rise of the Western World, especially the footnote on page 1 (I tweeted about it before). I was trying to figure out how to explain the following: in a setup where personal choice function = personal preferences + (factor) * network effects, with factor = 0 meaning that welfare is purely driven by preference considerations and factor = 1 meaning that preference and influence hold equal weight, my interacting group would maximize a global function in which this factor was ½ (leading to suboptimal convergence).

The answer came in North & Thomas’s footnote explaining the distinction between “private returns” and “social returns”. The difference is that social returns aggregate influence factors twice, “to me” and “from me”, while private returns aggregate them only once: “to me”. In the absence of active altruism, even a community bent on coordinating their actions might fail to do so, and in some circumstances even fail suboptimally. In the case of symmetric influences, this leads to a factor ½ for the “relevance” of network effects in welfare economics.
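To make the factor ½ concrete, here is a minimal numerical sketch. Everything in it is my own illustrative assumption rather than the model from the dissertation: two agents, a binary movie choice coded as +1 or -1, made-up preference weights `PREF`, a symmetric influence matrix `INFL`, and the helper names themselves. Private utility counts influence once ("to me"), the sum of utilities counts each symmetric pair twice, and the function that unilateral best responses actually climb (a potential function in the game-theory sense) is the one with the network term halved.

```python
from itertools import product

# Hypothetical toy numbers, chosen only for illustration.
PREF = (4, -3)            # p_i: agent 0 prefers movie +1, agent 1 prefers movie -1
INFL = ((0, 2), (2, 0))   # w_ij: symmetric gain from picking the same movie
FACTOR = 1                # weight of network effects in each private utility


def interaction(i, x):
    """Influence 'to me': what agent i gets from matching the others."""
    return sum(INFL[i][j] * x[i] * x[j] for j in range(len(x)) if j != i)


def utility(i, x, factor=FACTOR):
    """Private return: own preference plus factor * network effect."""
    return PREF[i] * x[i] + factor * interaction(i, x)


def welfare(x):
    """Sum of utilities: each symmetric pair is counted twice."""
    return sum(utility(i, x) for i in range(len(x)))


def potential(x):
    """Same sum, but with the network term weighted by FACTOR / 2."""
    return sum(utility(i, x, FACTOR / 2) for i in range(len(x)))


def flip(x, i):
    """Return the state in which only agent i switches movie."""
    return x[:i] + (-x[i],) + x[i + 1:]


def is_nash(x):
    """No agent gains by switching on their own."""
    return all(utility(i, x) >= utility(i, flip(x, i)) for i in range(len(x)))


states = list(product((1, -1), repeat=len(PREF)))

# A unilateral switch changes the agent's own utility by exactly the
# change in the potential, so private best responses climb the potential.
gains_match = all(
    utility(i, flip(x, i)) - utility(i, x) == potential(flip(x, i)) - potential(x)
    for x in states
    for i in range(len(x))
)

nash_states = [x for x in states if is_nash(x)]
best_for_potential = max(states, key=potential)
best_for_welfare = max(states, key=welfare)

print("unilateral gains match potential:", gains_match)
print("Nash states:", nash_states)
print("potential maximizer:", best_for_potential, "welfare:", welfare(best_for_potential))
print("welfare maximizer:", best_for_welfare, "welfare:", welfare(best_for_welfare))
```

With these numbers the only stable outcome is that each agent watches their own preferred movie, even though going together to agent 0's movie yields a higher sum of utilities. Agent 1 ignores the "from me" benefit that joining would give agent 0, which is the private versus social returns gap in miniature.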