I’m finishing up an article on commitment strategies as solutions to coordination games, and I’m curious whether this is a well-known phenomenon around here. I’m assuming it is, but is there a name for it?

The argument is that for any on-chain Schelling Point, a suitably funded commitment strategy can always enforce a different equilibrium among rational agents. The commitment can be as simple as “I commit to losing an additional amount x if I don’t get my way”, which signals that the committer will try very, very hard to get their way. If x is sufficiently large, it’s rational for everyone else to let them. And since it’s rational to do so, the commitment was free. That’s the really tricky part: an effective commitment can sit entirely off the equilibrium path, so in theory someone can enforce their preferred outcome at zero cost.
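To make the argument concrete, here is a minimal sketch on a hypothetical 2x2 coordination game with made-up payoffs: the row player (the committer) prefers outcome A, the column player prefers B, and both prefer matching to miscoordinating. As a simplifying assumption, the commitment is modelled as a bond of size x that is burned if the committer plays anything other than A. Without the bond there are two pure equilibria; once x exceeds the threshold, (A, A) is the only one, and the committer's payoff there is unchanged because the bond is never burned on the equilibrium path.

```python
from itertools import product

# Hypothetical 2x2 coordination game (payoffs are illustrative only).
# Row = committer, who prefers A; column = counterparty, who prefers B.
ACTIONS = ("A", "B")
BASE = {
    ("A", "A"): (2, 1),
    ("B", "B"): (1, 2),
    ("A", "B"): (0, 0),
    ("B", "A"): (0, 0),
}

def with_commitment(payoffs, x):
    """Assumed model: the row player burns a bond x whenever they play anything but A."""
    return {
        (r, c): (u_row - (x if r != "A" else 0), u_col)
        for (r, c), (u_row, u_col) in payoffs.items()
    }

def pure_nash(payoffs):
    """Return every pure-strategy profile where neither player gains by deviating alone."""
    eq = []
    for r, c in product(ACTIONS, ACTIONS):
        u_row, u_col = payoffs[(r, c)]
        row_ok = all(payoffs[(r2, c)][0] <= u_row for r2 in ACTIONS)
        col_ok = all(payoffs[(r, c2)][1] <= u_col for c2 in ACTIONS)
        if row_ok and col_ok:
            eq.append((r, c))
    return eq

def min_bond(payoffs):
    """Any bond strictly above this value makes A strictly dominant for the row player."""
    return max(
        payoffs[(r, c)][0] - payoffs[("A", c)][0]
        for r in ACTIONS if r != "A"
        for c in ACTIONS
    )

print(pure_nash(BASE))                      # [('A', 'A'), ('B', 'B')]
print(min_bond(BASE))                       # 1
print(pure_nash(with_commitment(BASE, 2)))  # [('A', 'A')]
```

With these numbers any bond above 1 is enough, and the committer still collects 2 at (A, A), exactly what they would get without committing.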

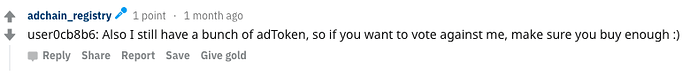

I discussed this strategy last month in the context of Fomo-3D, and Tarrence Van As pointed me to a recent illustration of it in Adchain’s Token Curated Registry (TCR), itself a coordination game. The founder of SpankChain appeared to use a commitment strategy to help his business get added to a TCR:

As far as I can tell, this is a general vulnerability of on-chain “Schelling Point” models: they can be solved with commitment strategies (or, more accurately, they aren’t Schelling Points at all). Hopefully that point is clear enough. When someone institutes an effective commitment strategy, the Pareto solution is always their preferred equilibrium, because value is destroyed in every other case; that is exactly what the commitment does. The outcome might not always be free for the committer, but it should always be their preferred one, regardless of whether it’s the right one.
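A rough illustration of the “value is destroyed in other cases” point, again on a hypothetical 2x2 game with made-up payoffs. Here the commitment is modelled the way it is phrased above (the committer burns x in every outcome other than their preferred one), and total payoff is used as a simple stand-in for “value”. The `expected_burn` helper is likewise a hypothetical illustration of why the commitment is not always free: if the counterparty ignores it with some probability, the committer expects to lose that fraction of the bond.

```python
# Hypothetical 2x2 coordination game (payoffs are illustrative only).
# Row = committer, who prefers ("A", "A"); column = counterparty, who prefers ("B", "B").
BASE = {
    ("A", "A"): (2, 1),
    ("B", "B"): (1, 2),
    ("A", "B"): (0, 0),
    ("B", "A"): (0, 0),
}

def outcome_penalty(payoffs, x, preferred=("A", "A")):
    """Assumed model: the committer burns x in every outcome except their preferred one."""
    return {
        o: (u_row - (0 if o == preferred else x), u_col)
        for o, (u_row, u_col) in payoffs.items()
    }

def welfare(payoffs):
    """Total payoff (row + column) in each outcome, a crude proxy for value."""
    return {o: u_row + u_col for o, (u_row, u_col) in payoffs.items()}

def expected_burn(x, p_ignore):
    """Expected bond burned if the counterparty ignores the commitment with probability p_ignore."""
    return p_ignore * x

print(welfare(outcome_penalty(BASE, 5)))
# {('A', 'A'): 3, ('B', 'B'): -2, ('A', 'B'): -5, ('B', 'A'): -5}
print(expected_burn(5, 0.25))  # 1.25
```

Only the committer's preferred outcome is left untouched by the burn; every alternative now carries the x penalty.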

Comments appreciated.