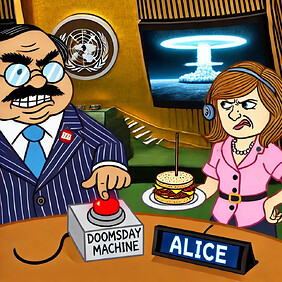

Smart Contract Commitment Attacks

“But, how is it possible for this thing to be triggered automatically, and at the same time impossible to untrigger?”

President Merkin Muffley

“Mr. President, it is not only possible, it is essential. That is the whole idea of this machine, you know. Deterrence is the art of producing in the mind of the enemy… the fear to attack. And so, because of the automated and irrevocable decision making process which rules out human meddling, the doomsday machine is terrifying. It’s simple to understand. And completely credible, and convincing.”

Dr. Strangelove

In the film Dr. Strangelove or: How I Learned to Stop Worrying and Love the Bomb, the USSR has a new method of nuclear deterrence; they have buried bombs that are programmed to trigger automatically if there is ever an attack. These bombs blow up the whole world, rather than just the enemy, but the deterrence effect still holds. If the motherland were attacked, the poor guy with the nuclear codes might have a hard time actually following through on the destruction of the whole planet, but a computer would do just fine. Thinking a step back, an enemy of the USSR might assume that no one would follow through on the threat of detonating the buried bombs out of a desire for self-preservation, and attack anyway. But that same enemy would be properly deterred if they knew the watchful and unyielding computer would end the world if so much as the tip of an airplane wing crossed into its territory. However, if they were unaware of the threat, this clearly would have no effect (in fact, this is the premise of the movie). Here, we see the power of irrevocable commitments – as long as everyone knows about them, as rightly pointed out by Dr. Strangelove himself. Not only can these irrevocable commitments be used for deterrence, but there are ready extensions towards coercion.

Image generated by DALL-E.

Imagine Alice and Boris are rational politicians at the UN and Alice takes the last BLT from the cafeteria. Imagine further that Boris has access to a doomsday machine and wants Alice’s sandwich. If he simply tells Alice, “Give me your sandwich or I’ll blow up the world,” Alice might be disinclined to comply. She would know that if she does not give up her sandwich, Boris would find much less utility in the fiery demise of the planet than in settling for a lesser lunch. So Alice can keep her sandwich even if Boris waves the nuclear detonator under her nose. If we introduce irrevocable commitments, the story changes. Suppose Boris comes to Alice as she is moments away from tucking in, and says,

Boris: “I’ve programmed the all-knowing, all-seeing, nuclear-bomb-controlling computer to blow up the world unless you give me your sandwich.”

Then Alice would be down one sandwich. In this scenario, Boris programs his computer, shows the code, and makes the threat, in response to which Alice can either comply and get back in line for soup or hold on to her sandwich, in which case the doomsday machine will end existence as there is nothing either Boris or Alice can do to stop it. We see now how these threats can be potent, predicated on a computer with godlike knowledge and untouchable power. This begs the question: could Alice do anything to protect her precious lunch if she too had such powerful technology at her fingertips?

To irrevocable commitments, we add another subtle, but powerful capacity: the ability to condition on the content of other’s commitments. If these computers are all-seeing, even the functioning of other computers would be within their grasp. Rather than pack a lunch, Alice spends the evening hatching a plan and comes to the next day’s activities prepared. At breakfast, where muffins abound and there is no need for hostilities, Alice approaches Boris and tells him of her creation,

Alice: “We, too, have an all-seeing, all-knowing computer with the means to initiate nuclear armageddon. It’s so powerful that it can see the programs you write on your computer, so if you so much as try to order your computer to end the world over a sandwich, my computer will hold onto my sandwich and refuse to give it up, forcing yours to follow through on your threat.”

Sure enough, at lunch, Alice once again snatches the last sandwich, stores it in a box over which her computer has full control, and waits for Boris to approach. He has the same program ready to send to his computer, but upon reflection, he realizes that Alice has outmaneuvered him and watches enviously as she devours today’s last Tuna Melt.

Absolute Commitments

We have seen in the example of Alice and Boris that these are both very powerful and that the party that makes their commitments first has a distinct advantage. We define absolute commitments to be those that are irrevocable and that have the capacity to condition on the content of other commitments.The premise in which these types of commitments are viable requires stringent assumptions and it is not immediately clear that this is a realistic scenario. Blockchains do not have the nuclear codes (yet), but we note that smart contracts are both irrevocable and could be made to condition on the content of other’s contracts, information that is public on the blockchain. In a recent series of works [1-3], we establish subtle attacks on several decentralized systems that make use of the power of being able to absolutely commit to strategies using smart contracts. It is the goal of this blog post to give the reader a thorough explanation of these recent results, with fewer technical details than are given in the papers.

The main premise is the following. In a strategic game, an agent may - in principle - deploy a smart contract to act on their behalf in said game. By security of the underlying blockchain, this smart contract irrevocably commits the agent to their strategy. This changes the game-theoretic assumptions. In particular, this enables an agent to commit to acting irrationally in certain situations, thus making credible otherwise non-credible threats. Moreover, since smart contracts are public, they can reason about each other, and so the commitments of one agent may depend on the commitments made by another agent. This has implications for the incentive-compatibility of blockchain-based systems, since the analysis done without taking these commitments into account does not necessarily apply when the commitments are added. The functioning of many blockchain systems depends on incentives, so a rupture in expected behavior could be detrimental to these systems.

A strategy that makes use of these commitments is known as a Stackelberg attack. We call those games that resist such attacks Stackelberg resilient. In particular, a game that is Stackelberg resilient must be so for any order of contracts. As we have seen, the order in which the commitments are made can impact the viability of an attack, so it is not trivial to require that a game must resist any order. The concern is warranted: in [1] we establish a Stackelberg attack on EIP-1559 (or auctions more generally) whereby the miner loses all of their revenue. Similarly, in [3] we establish a Stackelberg attack that allows the miner to extract full MEV from all users. In both cases, a successful attack would significantly deteriorate the quality of the affected chain. In [2], we show a potential attack for one on-chain escrow contract, but show that another remains resilient.

A Stackelberg Attack on EIP-1559

We discuss the implications of absolute commitments on multiple games that are relevant on-chain in our body of work and find attacks in most cases. The reasoning about these types of attacks and commitments is subtle and can be counterintuitive. We have seen, in our example of Boris and Alice, that these are potent attacks only if conditions are right. We now focus on the attack levied against auctions, which underpin key infrastructure, including the Ethereum fee mechanism, as discussed in [1]. While we do not believe that such an attack is currently feasible, it is important to explore the potential destructive power of Stackelberg attacks before they become viable.

To understand this attack, we first have to sketch the functioning of the auction fee mechanism. We will focus on EIP-1559, though we stress that the attack works also against larger classes of auctions. In our simplified model of the fee mechanism from EIP-1559, we imagine some number of agents hoping to get their transactions on the blockchain. We assume that there are more potential blockchain users than there are slots, not because the converse does not happen, but because it is uninteresting for our purposes. At this level, we do not differentiate between the different types of transactions other than that they give each agent some idiosyncratic level of utility. In particular, we assume the transactions all require the same amount of space. In addition to the base fee, which is burned, the agents can tip the miner. The miner then selects the transactions with the top tips until their block is full (we ignore other incentives such as MEV in this example). This is then an m-good first-price auction, where there are m slots in the block and each included user pays their bid. In the case without absolute commitments and in which the agents know each other’s valuations, the well known equilibrium is for the m agents with the highest valuations to bid the valuation of agent m+1 (the last agent left out of the block) plus some small amount ε. Everyone below that valuation will not win, and if they did, they would pay more than their valuation and thus reap negative utility. Everyone above pays the least amount possible to make sure they will be included, because there is exactly enough space for everyone willing to pay that much.

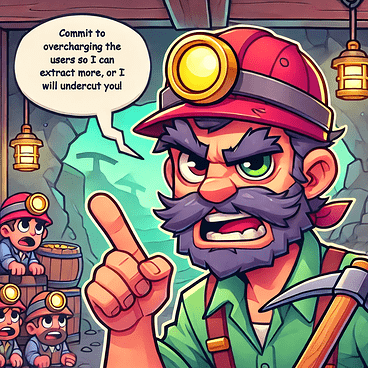

Image generated by DALL-E.

To introduce the attack, let us simplify the story to three agents and two block slots, i.e., m = 2. Label these agents 1, 2, and 3 and give them valuations 0 < v1 < v2 < v3. In the regular setting both agents 2 and 3 will bid v1 + ε, and be included, while agent 1 will always be outbid and can bid their valuation of v1 or 0 and will get no utility in any case. Now, let us further assume that agent 3 has the first contract and the other two have contracts in any order. Agent 3 might make the following threat,

Agent 3: “I commit to bidding 2ε if you commit to bidding some small amount ε; otherwise I will revert to my usual bid of v1 + ε.”

At first glance, this is not much of a threat. The bad scenario is just reverting to what we had before and that worked just fine. If the other two agents do comply, they will both commit to bid ε while agent 3 can bid ever so slightly higher. Then agent 3 will be included almost for free, while the other two are entered into a lottery for the remaining slot, again almost for free. Thus, it could be quite lucrative to comply with this threat as one of the later agents. That said, this is not always a viable threat. Imagine, for example, that agent 2 has a high valuation and agent 1 a very low one. Then the expected utility for agent 2, if they comply, is (v2 - ε)/2, i.e. their valuation minus ε, given that they will win the lottery half the time. This valuation could easily be lower than the valuation in the original case, where they must bid more but are guaranteed to get the slot, formally (v2 - v1). It is clear that if the valuations of agents 1 and 2 are close, the commitment case is better, and that not complying is better when agent 1 has a much lower valuation as compared to agent 2. Since we assume that the agents know all the valuations - this can be estimated in practice by looking at the tips in previous blocks - the valuations and the choices of the others is also something agent 3 would know. Thus, agent 3 can know when their threat is viable. The extension to many agents is straightforward and we direct the curious reader to [1]. While all the agents get better utility if they comply by assumption, such a regime would be very bad for the miners, who would see a steep fall in revenue.

It is interesting to note that, unlike Boris’s threats from before, nothing too bad happens if the threat is actually carried out. This means that there is little risk of committing to the threat in the case where it might not be seen by other agents or there is some other exogenous reason that not everyone will see and comply, even if that is the incentive compatible option. This threat is therefore particularly robust.

We consider MEV in a similar light in [3]. Here we give the miners contracts and the users take a more passive role. The miners and their order are known ahead of time and the next miner is in a position of power. Again we will see that there is a way in which the first agent can use some built-in advantage to extract a better scenario from those around them. In particular, the first miner threatens the followers into committing to excluding transactions, thus allowing the next miner to press customers for even more, lest they be excluded in future blocks. If the following miners do not comply with the threat, the next miner then undercuts them by offering blockspace without extracting MEV, which is desirable for users but means the later miners miss out on MEV income. This description simplifies the type of control that both miners and users have, but with the right broadcasting of information, this could be a concern.

Image generated by DALL-E.

One might wonder if there are any games that withstand such attacks or if there are any facts that might illuminate the workings of these attacks. In general, we show that determining if a game is resilient to such attacks is computationally intractable [2]. However, we find that there are games that withstand such attacks and there are games that do not. Both of the games under scrutiny in [2] are escrow games, and the difference between the game with resistance and that without are subtle. The key idea of these attacks is that there is some viable threat that the attacker can make to the other user. The threat can be bad for everyone, even the agent making the attack, but will presumably never take place. There must also be something that the attacker can stand to gain by executing the attack. While we can prove Stackelberg resilience of one of the escrow contracts, we do not fully understand what generally makes contracts resilient, while so many are not. We hope the community can help us find out how to design Stackelberg resilient contracts.

Practical Considerations

We believe these attacks are not (yet) viable in practice for multiple reasons. Firstly, if these attacks were possible, they have the potential to be highly lucrative and they would probably have already destroyed certain blockchain systems (the authors of this blog post have yet to become millionaires, if that is any indication). Moreover, the virtual machines of current blockchain systems do not support the instructions (opcodes) required to mount such an attack. As an example, in the EVM it is not possible to make the fees/tips conditional on the state of a smart contract or anything else. Rather, these tips and fees must be specified by the user when the contract is published, or when certain instructions are executed. Similarly, it is very hard for a contract to inspect whether another contract has been deployed let alone the precise nature of the contract. In practice, this could be implemented using a timer and a subroutine that inspects the chain to see if a certain address has published a certain smart contract. As for the content of other contracts, Rice’s theorem.) famously states it is undecidable for a program to determine if another program behaves a certain way. However, the contract of agent A need not check for semantic equivalence, but could simply check whether agent B publishes a contract with a specific hash output. Finally, an attack cannot work unless the other agents know about the attack so perhaps we should all pretend we never thought of this. Integrating smart contract/commitment capabilities into systems is becoming more prevalent, as exemplified by Uniswap recently adding smart contract capabilities to their system (so-called Hooks), and it is not inconceivable that these attacks may one day become feasible. If that day ever comes, it is our hope the community will have developed means to thwart these attacks.

To summarize, we believe that Stackelberg attacks cannot credibly be implemented today. However, it is our worry that future enhanced capabilities of these systems may one day enable such attacks. Indeed, since the potential monetary gain is so large, we expect such attacks to be prevalent if they are at all possible. We would thus like to draw attention to this issue while it still remains theoretical, in hopes that the community can find ways to mitigate the issue. Unfortunately, we fear the core issue is inherently ‘unfixable,’ in the sense that these attacks are too powerful to systematically avoid. We thus sincerely hope these attacks can never be realized in practice.

References

[1] Daji Landis and Nikolaj I. Schwartzbach. (2023). Stackelberg Attacks on Auctions and Blockchain Transaction Fee Mechanisms. In Frontiers in Artificial Intelligence and Applications (ECAI ‘23). IOS Press. link to pdf

[2] Daji Landis and Nikolaj I. Schwartzbach. (2024). Which Games are Unaffected by Absolute Commitments? In Proceedings of the 23rd International Conference on Autonomous Agents and Multiagent Systems (AAMAS '24). link to pdf

[3] Daji Landis and Nikolaj I. Schwartzbach. (2024). Optimal MEV Extraction Using Absolute Commitments. link to pdf

[4] Mathias Hall-Andersen and Nikolaj I. Schwartzbach (2021). Game Theory on the Blockchain: A Model for Games with Smart Contracts. In Algorithmic Game Theory (pp. 156–170). link to pdf