Clarification of Premises

To keep the discussion fair and fundamental, it’s important to clarify how circumstances have changed between when we started discussing PoS (Casper FFG) and committee-based finality, and the present.

- Because restaking/LSD tokens benefit from pooled liquidity, pools are now incentivized towards a winner-takes-all outcome.

- Proto-danksharding has introduced temporary and inexpensive block space.

In these changing circumstances, the trade-offs of the proposed philosophical pivot approaches (1), (2), and (3) in VB’s post are:

(1) The protocol becomes very simple and efficient. The number of nodes itself decreases, and the pool management methods may become complicated depending on the situation.

(2) The protocol itself maintains its complexity. The efficiency of block space increases, and censorship resistance is maintained as it is.

(3) The complexity of the protocol itself increases compared to before. The barrier to entry for staking is reduced.

Overall, there seem to be two main challenges: fixing the situation where the 32 ETH upper (not lower) limit is no longer meaningful and is hindering block space and bandwidth efficiency, and addressing the current concentration in pools.

Clarification and Resolutions of the Problems

Problem 1: DVT should be made simple, excluding cryptography.

Fundamentally, the ‘go all-in on decentralized staking pools’ approach of philosophical pivot option (1) aims to balance solo staking with large-scale pools. While this may sacrifice liveness, it ensures that safety is always maintained. This characteristic aligns with switchable PoW mining pools. It could be said that this is a direction already proven to work.

One question arises regarding the link mentioned: Are technologies like MPC and SSS really necessary for DVT? In my view, what’s essential is the ability to switch pools, and for that, only the following two components are necessary:

- The ability to invalidate a signing key within two blocks.

- The inability of pools to discern whether a solo staker is online.

As has been discussed a lot in the Ethereum PoS space, PoW is safe because if someone attempts to control 51%, miners can notice that a pool is mining a fork chain and switch pools accordingly. In the current PoS system, since the signing key is delegated to the pool, a staker might become an attacker against her will and can only watch herself being slashed. To change this, the holder of the withdrawal key should be able to immediately cancel their signing key if they discover it’s being used for double voting. To implement this principle, a finality of about 2 slots, rather than SSF, seems preferable.

Once this principle is introduced, as long as pools cannot determine whether solo stakers are online, they would be too fearful to attempt double voting. This would allow solo stakers to go offline. Of course, there’s a possibility that pools might speculatively attempt double voting, but as with the current PoS system, attackers would not recover after being slashed, making it not worthwhile.
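To make the idea concrete, here is a minimal sketch of how a withdrawal key holder could detect double voting by a pool and revoke the delegated signing key before the votes become final. Everything in it is an assumption for illustration: the names `Vote`, `KeyRegistry`, `find_double_votes`, and `FINALITY_DELAY_SLOTS` are hypothetical, and the rule "a revocation voids any vote that is not yet two slots old" is only one possible reading of the 2-slot finality idea, not an existing protocol mechanism.

```python
from dataclasses import dataclass, field

# Assumption: a vote becomes final two slots after it is cast.
FINALITY_DELAY_SLOTS = 2


@dataclass(frozen=True)
class Vote:
    signing_key: str  # key the staker delegated to the pool
    slot: int
    block_root: str


@dataclass
class KeyRegistry:
    # signing_key -> slot at which the withdrawal key holder revoked it
    revoked_at: dict = field(default_factory=dict)

    def revoke(self, signing_key: str, slot: int) -> None:
        # Keep the earliest revocation slot if revoke is called twice.
        self.revoked_at.setdefault(signing_key, slot)

    def counts_toward_finality(self, vote: Vote) -> bool:
        revoked = self.revoked_at.get(vote.signing_key)
        if revoked is None:
            return True
        # Votes that were already final when the revocation landed still count.
        return vote.slot + FINALITY_DELAY_SLOTS <= revoked


def find_double_votes(votes):
    """Return signing keys that voted for two different roots in one slot."""
    first_root = {}
    offenders = set()
    for v in votes:
        seen = first_root.setdefault((v.signing_key, v.slot), v.block_root)
        if seen != v.block_root:
            offenders.add(v.signing_key)
    return offenders


votes = [
    Vote("pool-key-1", 10, "0xaa"),
    Vote("pool-key-1", 10, "0xbb"),  # conflicting vote in the same slot
    Vote("pool-key-2", 10, "0xaa"),
]
registry = KeyRegistry()
for key in find_double_votes(votes):
    registry.revoke(key, slot=11)  # revocation lands one slot later
```

In this toy model the conflicting votes from `pool-key-1` no longer count because the revocation arrived inside the 2-slot window, while the honest `pool-key-2` vote is unaffected. The pool cannot know in advance whether the key holder is watching, which is the deterrent described above.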

Problem 2: Few Solo Stakers

The reason for the lack of solo stakers is their lack of confidence in their network environment, even before considering the risk of slashing. Moreover, an increase in solo stakers on AWS is essentially meaningless from the perspective of decentralization. These issues can be resolved by the measures described in the DVT section above (Problem 1), namely creating a state where solo stakers delegate to pools and the pools cannot tell whether those stakers are online or offline.

An important point is that solo stakers going offline does not guarantee absolute safety from being slashed; it only significantly reduces the likelihood of being slashed. This characteristic is a major difference from the current solo staking situation, where going offline often leads to a high probability of being slashed.

Problem 3: Block Size

One reason for the large block size in PoS is the presence of a bit array indicating whether each signer has signed. (The signatures themselves can be discarded some time after block approval.)

The reasons for this array include:

- To prevent rogue key attacks.

- For use in slashing.

- For reward calculations.

For the first two (rogue key attacks and slashing), the array can, like the signatures, be discarded once the block is sufficiently approved. For reward calculations, however, especially now with Proto-danksharding, it seems possible to balance reward calculation and block space reduction by publishing the Merkle tree for a certain period and then discarding it.

Specific steps:

- Divide the bit array, which flags the signers, into several parts and make them leaves of a Merkle Tree.

- Place all the leaves of the Merkle Tree in a blob.

- Include only the Merkle Root in the block.

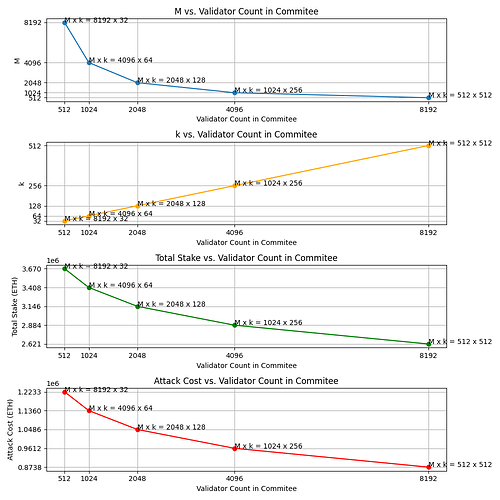

Each person can use a Merkle proof to prove their rewards, and with recursive ZKP, all proofs can be combined into a single withdrawal transaction. This could potentially reduce the size stored in the block from 8192 bits to about 256 bits. If a block contains a wrong root, the majority of validators can always ignore that block.
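The steps above can be sketched as follows. This is a minimal illustration, not a proposed encoding: it assumes SHA-256 as the hash, 32-byte (256-bit) leaves, and a power-of-two number of leaves, and the helper names (`split_bitfield`, `build_levels`, `merkle_proof`, `verify`) are hypothetical. Blob publication and the recursive ZKP aggregation are out of scope here.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def split_bitfield(bitfield: bytes, chunk_size: int = 32) -> list:
    """Divide the signer bit array into fixed-size chunks (the leaves)."""
    return [bitfield[i:i + chunk_size] for i in range(0, len(bitfield), chunk_size)]


def build_levels(leaves: list) -> list:
    """Return every level of the tree, from hashed leaves up to the root."""
    assert len(leaves) & (len(leaves) - 1) == 0, "sketch assumes 2^k leaves"
    level = [h(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def merkle_proof(levels: list, index: int) -> list:
    """Collect the sibling hash at each level for the leaf at `index`."""
    proof = []
    for level in levels[:-1]:
        proof.append(level[index ^ 1])
        index //= 2
    return proof


def verify(root: bytes, leaf: bytes, index: int, proof: list) -> bool:
    node = h(leaf)
    for sibling in proof:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == root


# 8192 signer flags = 1024 bytes; mark validator 5000 as having signed.
bitfield = bytearray(1024)
bitfield[5000 // 8] |= 1 << (5000 % 8)

leaves = split_bitfield(bytes(bitfield))  # these would go into the blob
levels = build_levels(leaves)
root = levels[-1][0]                      # only this goes into the block

leaf_index = (5000 // 8) // 32            # chunk holding validator 5000's flag
proof = merkle_proof(levels, leaf_index)
```

With these parameters there are 32 leaves, the proof contains 5 sibling hashes, and the root is 32 bytes, which matches the 8192-bit to 256-bit reduction mentioned above. A validator claiming rewards would present their leaf, its index, and the proof while the blob is still available.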

Problem 4: Censorship Resistance

It is discussed in this thread that solo stakers, who produce blocks without going through pools, are key to censorship resistance in the core protocol.

Personally, I believe this is a consequence of stakers not being able to switch pools. Once they can switch as per the above procedure, it simply becomes a matter of stakers not choosing pools that have implemented censorship programs. Generally, censored addresses are likely to pay higher fees to get through, so market principles should ensure higher profits for pool operators who do not censor. Therefore, approach (1) can maintain a degree of censorship resistance.

Problem 5: MEV

This is a problem that wouldn’t be discussed so lightly if it were easy to solve. However, as long as the Rollup Centric Roadmap continues, it seems appropriate for the protocol to support Layer 2 solutions that aim to be based rollups, if there is an opportunity to do so.

TL;DR of my personal opinion

Adopting Approach (1) (DVT) with switchable pools is the easiest way to support solo stakers and to minimize the block size. It leaves almost no problems unsolved. Pool managers cannot use the stake to perform a 51% attack if and only if the withdrawal keys can stop it and the pool cannot tell whether the withdrawal key holders are online and watching the pool’s behavior. The other approaches are also worth considering, but Approach (1) is what we can call the simplification of PoS.

The remaining question is how to build switchable staking pools with the shortest possible finality. My guess is that it takes 2 slots.