Special thanks to Justin Drake, Elias Tazartes, Clément Walter, and Rami Khalil for review and feedback.

TL;DR

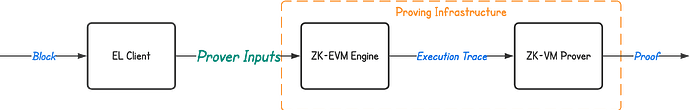

On the path towards real-time block proving, this post proposes a unified data format for the input that ZK-EVM prover engines should accept in order to prove an Ethereum Execution Layer (EL, or EVM) block (see the article by Vitalik for context on validity proofs of EVM execution).

Note: This article focuses only on the input that ZK-EVMs need to generate Execution Layer proofs. It does not address the Consensus Layer, which is considered an independent problem.

Motivation

The ZK-EVM block proving ecosystem is rapidly expanding, with various teams implementing their own ZK-EVM engines, such as Keth (Cairo) by Kakarot, Zeth (RISC-V) by RISC Zero, and RSP (RISC-V) by Succinct.

While all these ZK-EVMs aim to prove the same EVM blocks, each currently accepts its own vendor-specific input. This hinders operators who need to work with multiple vendors and stands in the way of a neutral and open supply chain for real-time block proving.

This post proposes a standardized EVM data format for ZK-EVM Prover Input that:

- EL clients can generate for every block.

- ZK-EVM provers can accept as input to generate EL proofs.

The Prover Input format aims to facilitate the creation of a neutral supply chain for real-time block proving by:

- Facilitating multi-proof proving infrastructure: Allowing operators to work seamlessly with multiple ZK-EVM prover vendors by always passing the same input to every ZK-EVM prover engine.

- Enabling the creation of a standard interface: Between EL clients (Execution Layer full clients executing blocks) and the proving infrastructures running one or more ZK-EVM provers (proving the blocks).

ZK-EVM Prover Input

Workflow

When dealing with ZK-EVMs, we can broadly distinguish three types of data for generating the block’s proof:

- The Prover Input: The EVM data required by the ZK-EVM engine to execute the block and generate the Execution Trace. This is vendor-agnostic.

- The Execution Trace: An intermediary input generated by the ZK-EVM engine for each block, required by the proving engine to generate the final proof. This is vendor-specific.

- The Proof: The final output of the prover, which proves the block's execution and the resulting state transition. This is vendor-specific.
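
The split between vendor-agnostic input and vendor-specific trace and proof can be sketched as a small interface. This is only an illustration: `ZkEvmEngine`, `execute`, `prove`, and `prove_with_all` are hypothetical names chosen for this sketch, not an API that any ZK-EVM vendor exposes.

```python
from abc import ABC, abstractmethod
from typing import Any, Dict, List


class ZkEvmEngine(ABC):
    """Hypothetical vendor engine: same Prover Input in, vendor-specific data out."""

    name: str

    @abstractmethod
    def execute(self, prover_input: Dict[str, Any]) -> bytes:
        """Run the block(s) and return a vendor-specific Execution Trace."""

    @abstractmethod
    def prove(self, trace: bytes) -> bytes:
        """Turn the Execution Trace into a vendor-specific Proof."""


def prove_with_all(
    engines: List[ZkEvmEngine], prover_input: Dict[str, Any]
) -> Dict[str, bytes]:
    """Pass the same Prover Input to every engine and collect one proof per vendor."""
    proofs: Dict[str, bytes] = {}
    for engine in engines:
        trace = engine.execute(prover_input)       # vendor-specific intermediate
        proofs[engine.name] = engine.prove(trace)  # vendor-specific output
    return proofs
```

The only thing shared across engines here is `prover_input`; traces and proofs never cross vendor boundaries.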

Statelessness

ZK-EVM proving engines, when proving a block, operate in a stateless environment without direct access to a full node. Therefore, the Prover Input must include the block witness, which consists of the minimal state and chain data required for EVM block execution (executing all transactions in the block, applying fee rewards, and deriving the post-state root).
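
As a rough sketch of what stateless execution implies, the engine can index the witness's trie nodes by hash and resolve every node reference from that index instead of from a database. The names `index_nodes` and `resolve` are hypothetical. Ethereum hashes trie nodes with Keccak-256, which is not part of Python's standard library (`hashlib.sha3_256` is the NIST SHA-3 variant and produces different digests), so the hash function is passed in as a parameter here.

```python
from typing import Callable, Dict, Iterable


def index_nodes(
    nodes_hex: Iterable[str], hash_fn: Callable[[bytes], bytes]
) -> Dict[bytes, bytes]:
    """Map hash(node) -> node for every RLP-encoded trie node in the witness."""
    index: Dict[bytes, bytes] = {}
    for node_hex in nodes_hex:
        stripped = node_hex[2:] if node_hex.startswith("0x") else node_hex
        node = bytes.fromhex(stripped)
        index[hash_fn(node)] = node  # hash_fn is assumed to be Keccak-256 in practice
    return index


def resolve(index: Dict[bytes, bytes], node_hash: bytes) -> bytes:
    """Return the node referenced by node_hash, or fail if the witness is incomplete."""
    try:
        return index[node_hash]
    except KeyError:
        raise KeyError(f"witness is missing trie node {node_hash.hex()}") from None
```

A missing node is a hard failure: without a full node to fall back on, the block cannot be executed from an incomplete witness.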

Prover Input Data

We propose the following format for Prover Input data:

| Item | Description | Rationale |
|---|---|---|
| Version | A string field indicating the version of the prover input. | The required prover input may evolve over time, so the format needs to be versioned. Versioning also makes it possible to extend the format with chain-specific data for other EVM chains. |
| Blocks | Blocks to be proven, including: • Header: The header of the block to be proven. • Transactions: The list of the RLP-encoded transactions of the block to be proven. • Uncles: The list of uncle block headers. • Withdrawals: The list of validator withdrawals for the block. | Necessary to compute the block hash, create the EVM Context, execute transactions and finalize the block. |
| Blocks’ Witness | Minimal state and chain data required for EVM block execution, including: • Pre-State: The list of all RLP-encoded Merkle Patricia Trie nodes resolved during block execution (from both the account trie and the storage tries, mixed in a single list). • Codes: The list of all smart contract bytecodes called during block execution. • Ancestors: The list of block headers, containing at least the direct parent of every block and optionally older ancestors if accessed with the BLOCKHASH opcode during block execution. | Necessary data to execute the blocks. |
| Chain Configuration | The chain configuration, including all hard forks. | The proving infrastructure will generate proofs for multiple chains. Passing the chain configuration in the Prover Input enables a ZK-EVM instance running in a proving infrastructure to be chain-agnostic and generate proofs for multiple chains. |

Note: This does not include the `total_difficulty` accrued as of the parent block, which is necessary for fork selection in a pre-merge context. Assuming proving pre-merge blocks is not absolutely necessary, we keep this open for discussion.

In JSON format, this gives:

    {
      "version": "...",
      "blocks": [
        {
          "header": {...},
          "transactions": [
            "0x...",
            ...
          ],
          "uncles": [{...},...],
          "withdrawals": [{...},...]
        }
      ],
      "witness": {
        "state": [
          "0x....",
          ...
        ],
        "codes": [
          "0x....",
          ...
        ],
        "ancestors": [
          {...},
          ...
        ]
      },
      "chainConfig": {
        "chainId": ...,
        "homesteadBlock": ...,
        "daoForkBlock": ...,
        "daoForkSupport": ...,
        "eip150Block": ...,
        ...
      }
    }
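
A consumer of this format would probably want to check the overall shape before handing the input to a prover. The sketch below does that using only the key names from the JSON layout above; the function `check_prover_input` is hypothetical, and treating every listed key as mandatory (for example `withdrawals` on blocks that predate withdrawals) is an assumption rather than something the proposal specifies.

```python
from typing import Any, Dict, List

# Key names come from the JSON layout above; the checker itself is hypothetical.
REQUIRED_TOP_LEVEL = ("version", "blocks", "witness", "chainConfig")
REQUIRED_BLOCK = ("header", "transactions", "uncles", "withdrawals")
REQUIRED_WITNESS = ("state", "codes", "ancestors")


def check_prover_input(data: Dict[str, Any]) -> List[str]:
    """Return a list of structural problems; an empty list means the shape looks right."""
    problems = [f"missing top-level key: {k}" for k in REQUIRED_TOP_LEVEL if k not in data]

    blocks = data.get("blocks", [])
    if "blocks" in data and not blocks:
        problems.append("blocks must contain at least one block")
    for i, block in enumerate(blocks):
        problems += [f"blocks[{i}] missing key: {k}" for k in REQUIRED_BLOCK if k not in block]

    witness = data.get("witness", {})
    if "witness" in data:
        problems += [f"witness missing key: {k}" for k in REQUIRED_WITNESS if k not in witness]
    # The table requires at least the direct parent header of every block.
    if "ancestors" in witness and not witness["ancestors"]:
        problems.append("witness.ancestors must contain at least the parent header")
    return problems
```

This checks structure only. It does not verify that the witness is sufficient to execute the blocks, which can only be established by running them.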

Prover Input Format

We are currently evaluating various options for Prover Input formats (e.g., Protobuf, SSZ, RLP) and compression (e.g., gzip). The primary goals are to:

- Reduce Prover Input size: Speed up network communication, which is crucial for real-time block proving.

- Ensure compatibility: Support all major programming languages and existing blockchain tooling.
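
To get a first feel for the size trade-off, one option from the list above (JSON plus gzip) can be measured with the Python standard library alone. This is a minimal sketch, not a recommendation of that combination; `encode` and `decode` are hypothetical helper names.

```python
import gzip
import json
from typing import Any, Dict


def encode(prover_input: Dict[str, Any]) -> bytes:
    """Serialize to compact JSON (no extra whitespace) and gzip the result."""
    raw = json.dumps(prover_input, separators=(",", ":"), sort_keys=True).encode("utf-8")
    return gzip.compress(raw)


def decode(blob: bytes) -> Dict[str, Any]:
    """Inverse of encode()."""
    return json.loads(gzip.decompress(blob).decode("utf-8"))


if __name__ == "__main__":
    # Toy payload only: it is highly repetitive, so the ratio is not representative.
    sample = {"version": "...", "witness": {"state": ["0x" + "ab" * 64] * 200}}
    raw_size = len(json.dumps(sample, separators=(",", ":")).encode("utf-8"))
    print(f"raw JSON: {raw_size} bytes, gzip: {len(encode(sample))} bytes")
```

Meaningful numbers require running the same comparison on Prover Inputs generated from real blocks, and repeating it for the binary encodings under evaluation.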

Batching Blocks in a Single Prover Input

To enable batching and optimize proving costs, we propose a Prover Input containing a list of blocks (instead of a single block).

This allows setting the witness for all the blocks at once, so there is no duplication when multiple blocks access the same account, storage, headers, or codes.

In the context of real-time block proving, which requires a proof per block, the prover input will contain only a single block, but other use cases may benefit from a list.
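
The deduplication benefit can be illustrated by merging per-block witnesses into a single shared one. The sketch below is a naive union over the `state` and `codes` lists from the format above; `merge_witnesses` is a hypothetical helper, and `ancestors` is left out because headers are objects that would more likely be deduplicated by block hash.

```python
from typing import Dict, Iterable, List


def merge_witnesses(per_block: Iterable[Dict[str, List[str]]]) -> Dict[str, List[str]]:
    """Merge per-block witnesses into one, keeping the first occurrence of each item."""
    merged: Dict[str, List[str]] = {"state": [], "codes": []}
    seen = {key: set() for key in merged}
    for witness in per_block:
        for key in merged:
            for item in witness.get(key, []):
                normalized = item.lower()  # hex strings may differ only in letter case
                if normalized not in seen[key]:
                    seen[key].add(normalized)
                    merged[key].append(item)
    return merged
```

A generator that builds the batch witness directly could likely go further and omit trie nodes that an earlier block in the batch creates, since the prover derives those during execution.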

Storing Prover Input

Beyond being posted to proving infrastructure for real-time block proving, Prover Input can be reused for other use cases by any agent. We are also considering storing Prover Inputs on:

- Data Availability layers (e.g., Avail, Celestia): Ideal for making input available temporarily, allowing all provers to generate proofs before purging the data.

- A shared data bucket (e.g., S3): Public for reads, with policies in place to freeze older data.

Extending to Other EVM Chains

The Prover Input format can be easily extended to other EVM chains by using a specific version. For example, in the case of Optimism, the OP-specific chain configuration fields would also be included.