Author: Max Resnick

Acknowledgements: @mikeneuder, @timbeiko, @gakonst, @mmp, and Tivas Gupta for helpful comments.

Blob EIPs and Minimum Validator Requirements

On the last execution ACD call, a blob target increase and several auxiliary proposals were moved to CFI status.

Those proposals were:

- EIP 7623 (Increase call data costs)

  - This EIP increases the gas cost of call data so that the maximum amount of call data that fits in a block is lower, which reduces the maximum size of a block. Before 4844, rollups posted their data to the blockchain as call data, so increasing its price was not feasible. Now that rollups post blobs instead, we can raise the price of call data to control the max block size without causing problems for rollups.

- EIP 7762 (Increase min_base_fee_per_blob_gas)

  - This EIP sets a small reserve price for blobs (~1c), which is designed to speed up price discovery. Because of how the controller is implemented, each factor-of-2 increase in the price of blobs takes almost 6 full blocks, so setting this parameter to 2^25 wei rather than 1 wei saves the controller a lot of ramp-up time.

- EIP 7742 (Uncouple blob count between CL and EL)

  - This is mostly a housekeeping change: it puts the blob count variables in the right place, in keeping with the proper separation of concerns between the EL and CL.

- EIP 7691 (Increase blob target to 4 from 3, blob limit remains at 6)

  - The EIP 4844 fee controller is an integral controller, which would work just as well for a target of 4 and a limit of 6 as it does for a target of 3 and a limit of 6.
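
The "almost 6 full blocks" figure in the EIP 7762 item can be checked with a few lines of arithmetic. The sketch below is a continuous approximation, not the integer `fake_exponential` routine clients actually run. The constants `GAS_PER_BLOB` and `BLOB_BASE_FEE_UPDATE_FRACTION` are taken from the EIP 4844 specification rather than from this post, and the helper names are made up for illustration.

```python
import math

# Constants from the EIP 4844 specification (assumed here, not stated in the post).
GAS_PER_BLOB = 2**17
BLOB_BASE_FEE_UPDATE_FRACTION = 3338477


def blocks_to_double(target_blobs: int = 3, limit_blobs: int = 6) -> float:
    """Consecutive full blocks needed to double the blob base fee (approximate)."""
    # A full block adds (limit - target) blobs' worth of gas to the excess blob gas.
    excess_per_full_block = (limit_blobs - target_blobs) * GAS_PER_BLOB
    # The fee scales as exp(excess / update_fraction), so each full block
    # multiplies it by exp(excess_per_full_block / update_fraction).
    log_growth_per_block = excess_per_full_block / BLOB_BASE_FEE_UPDATE_FRACTION
    return math.log(2) / log_growth_per_block


def blocks_to_reach(price_wei: int, start_wei: int = 1) -> float:
    """Full blocks needed to climb from start_wei to price_wei (approximate)."""
    return math.log2(price_wei / start_wei) * blocks_to_double()


if __name__ == "__main__":
    print(f"blocks per doubling: {blocks_to_double():.2f}")
    print(f"blocks from 1 wei to 2^25 wei: {blocks_to_reach(2**25):.0f}")
```

Under these assumptions a doubling takes a little under 6 full blocks, so climbing from 1 wei to 2^25 wei takes on the order of 150 consecutive full blocks, or roughly half an hour at 12-second slots.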

The Pushback

There was some pushback against these proposals, both on the call and from solo stakers. In particular, solo stakers were worried about the additional bandwidth required to solo-propose a block. But if you look at the proposals above, those fears may be misplaced. In fact, if all the proposals were included together, the maximum size of a block would go down: increasing the blob target doesn’t mean increasing the blob limit, and the addition of 7623 would lower the maximum size of the non-blob portion of the block payload.
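
A back-of-the-envelope version of this argument is sketched below. It is only a rough bound under several assumptions that are not in the post: a 30M gas limit, a block filled entirely with nonzero call data bytes, 16 gas per nonzero byte today, and an effective 40 gas per nonzero byte under EIP 7623 (10 gas per token, 4 tokens per nonzero byte, per that EIP's specification). It ignores intrinsic transaction gas, headers, and compression, and the function names are hypothetical.

```python
BYTES_PER_BLOB = 131072  # 4096 field elements * 32 bytes each (EIP 4844)


def max_calldata_bytes(gas_limit: int, gas_per_nonzero_byte: int) -> int:
    """Upper bound on nonzero call data bytes that fit in one block."""
    return gas_limit // gas_per_nonzero_byte


def worst_case_payload_bytes(gas_limit: int, gas_per_nonzero_byte: int,
                             blob_limit: int) -> int:
    """Worst-case call data plus the maximum number of blobs, in bytes."""
    return (max_calldata_bytes(gas_limit, gas_per_nonzero_byte)
            + blob_limit * BYTES_PER_BLOB)


if __name__ == "__main__":
    GAS_LIMIT = 30_000_000  # assumed gas limit
    before = worst_case_payload_bytes(GAS_LIMIT, 16, blob_limit=6)
    after = worst_case_payload_bytes(GAS_LIMIT, 40, blob_limit=6)
    print(f"today:         {before:,} bytes")
    print(f"with EIP 7623: {after:,} bytes")
```

Because the blob limit stays at 6 in both cases, the blob contribution is identical and the whole difference comes from the call data term.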

In addition, some solo stakers posted about their poor upload bandwidth, which sparked a discussion of minimum validator requirements for solo staking, especially for those who solo-propose. Only a small fraction of nodes solo-propose blocks, and doing so has a high opportunity cost. Still, let’s take these concerns at face value.

Response to Pushback

First, how much bandwidth does it take to reliably propose a block of size x? The proposer needs their block to reach at least 40% of the network before 4 seconds into the slot. The block propagates through the P2P network, but before this can happen the proposer needs to seed it by sending the full block to a subset of N of its peers. Sending to more and higher-quality peers improves the probability that the block will reach a sufficient portion of the network before the timeout.

But as I understand it, the default client implementations optimize only very naively for latency and reliability when dealing with peers. In other words, there may be ways for nodes to improve their block propagation speed without using additional bandwidth.

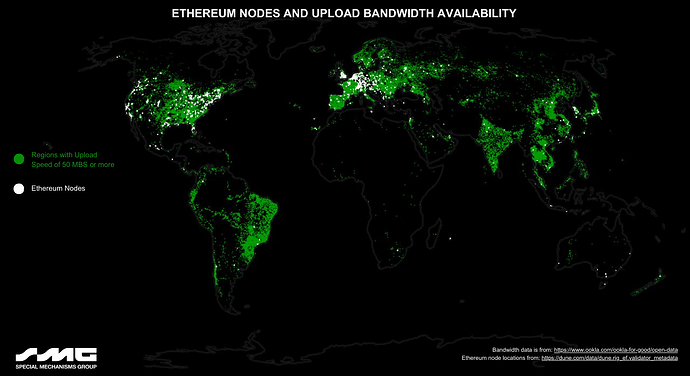

Regardless, extremely poor connections from rural stakers are likely to become a bottleneck in the future, so it is important to set internet connection requirements just as we set hardware requirements. I suggest 50 Mb/s upload speed as a starting point for these discussions. While we don’t need nearly that much bandwidth today, the goal of the rollup roadmap is to get to 64 blobs per block, so even with the optimizations coming with PeerDAS, this would leave room for significant scaling in the future. Furthermore, 50 Mb/s upload speed on consumer internet is broadly available in North and South America, Asia, and Europe. Africa also has substantial (albeit much less comprehensive) access at this speed range. This speed range will therefore give the network substantial headroom to grow while still allowing for desirable levels of geographic decentralization.
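
To get a feel for how upload speed relates to the seeding step, here is a minimal sketch of the arithmetic. It assumes the proposer uploads the full payload once to each of N peers, with no protocol overhead, compression, or parallel help from the rest of the network. The payload size and peer count in the example are hypothetical values chosen for illustration, not measurements or figures from this post.

```python
def seeding_time_seconds(payload_bytes: int, peers: int, upload_mbps: float) -> float:
    """Time to upload the full payload to every peer at a given upload speed."""
    total_bits = payload_bytes * 8 * peers
    return total_bits / (upload_mbps * 1_000_000)


def required_upload_mbps(payload_bytes: int, peers: int, seconds: float) -> float:
    """Upload speed (Mb/s) needed to seed every peer within a time budget."""
    total_bits = payload_bytes * 8 * peers
    return total_bits / seconds / 1_000_000


if __name__ == "__main__":
    PAYLOAD_BYTES = 1_500_000  # hypothetical block plus blobs
    PEERS = 8                  # hypothetical N
    t = seeding_time_seconds(PAYLOAD_BYTES, PEERS, upload_mbps=50)
    print(f"seeding time at 50 Mb/s: {t:.2f} s")
    need = required_upload_mbps(PAYLOAD_BYTES, PEERS, seconds=1.0)
    print(f"upload needed for a 1 s budget: {need:.0f} Mb/s")
```

The relationship is linear in both payload size and peer count, which is why smarter peer selection can substitute for raw bandwidth: halving N halves the upload needed for the same time budget.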

Proposals to Decrease The Minimum Stake

On Twitter, Vitalik proposed lowering the stake required to run a node. I think this would be a bad idea. There are two reasons we have stake: the first is accountability (slashing for bad behavior), and the second is Sybil-proofness (you need stake to participate). Lowering the minimum staking requirement would be bad on the margin because we already have far too many signatures to aggregate for finality. Each additional validator imposes a cost on the network by introducing another signature that must be aggregated each epoch. The current minimum stake seems about right to me, and hopefully we see a large reduction in the node count after MaxEB in the next hard fork. Further, out-of-protocol solutions already allow solo stakers to stake with substantially lower collateral.
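
The signature-count concern can be illustrated with a simple upper bound: if every staker split their stake into minimum-size validators, the number of signatures to aggregate per epoch would scale inversely with the minimum stake. The total staked amount below is a round hypothetical figure, not live chain data, and the function name is made up for this sketch.

```python
def max_signatures_per_epoch(total_staked_eth: int, min_stake_eth: int) -> int:
    """Upper bound on validators, each contributing one attestation per epoch."""
    return total_staked_eth // min_stake_eth


if __name__ == "__main__":
    TOTAL_STAKED_ETH = 32_000_000  # hypothetical round number
    for min_stake in (32, 16, 1):
        sigs = max_signatures_per_epoch(TOTAL_STAKED_ETH, min_stake)
        print(f"min stake {min_stake:>2} ETH -> up to {sigs:,} signatures per epoch")
```

This is a worst-case bound rather than a forecast, since not all stake would necessarily be split into minimum-size validators.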