Casper FFG: Returns, Deposits & Market Cap

Link to working draft which includes images

The Fixed Income Approach

The proposed approach for incentivizing Casper largely follows fixed income asset modeling. In a fixed income asset, a fixed amount of income is paid out at regular intervals. Any qualifying actor can choose to join or leave the mechanism, and the resulting level of participation determines the yield (income as a % of deposits) that participants earn. The more demand there is, the more the yield is competed away. The less demand there is, the higher the yield becomes.

This allows for two key things. First, it is a natural way for the market to determine the required return for a validator set. Whether the market judges the mechanism “risky” enough to demand a 5% return, a 15% return or a 50% return, we will be able to see that empirically in the participation of the validators (explained further below). Second, once we have a good understanding of the required return for a given game, we can incentivize a desired level of total deposits in the network by setting the corresponding fixed income reward.

1. Required Return as Determined by the Market

Let’s take a simple example. Let’s say Bob loans Alice $1,000 in exchange for interest payments of $100 per year for five years. At that moment, Bob’s perceived annualized “discount rate”, or required return, for this investment is 10% ($100 / $1,000).

Now let’s assume that this contract can be bought and sold openly. If others believe that a 10% yield is a good deal, they will be willing to pay Bob more than $1,000 for this piece of paper. Let’s say Connor buys the contract for $1,100. It still pays $100 per year, but now the yield is about 9.1% ($100 / $1,100). Conversely, if it turns out Alice just missed a credit card payment, people might view loaning money to Alice as a risky endeavor, and the holder might sell the contract at a lower price of $900 to derisk their position, which increases the yield to about 11.1% ($100 / $900).
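The arithmetic in the loan example can be checked with a few lines of Python. This is only a sketch of the simple "current yield" calculation used above (annual payment divided by price paid); the function name `current_yield` is made up for illustration, and the calculation ignores repayment of principal and the five-year term.

```python
def current_yield(annual_payment: float, price: float) -> float:
    """Annual payment divided by the price paid for the contract."""
    if price <= 0:
        raise ValueError("price must be positive")
    return annual_payment / price


ANNUAL_PAYMENT = 100.0  # Alice pays $100 per year

# Original loan, Connor's purchase, and the distressed sale from the example.
for price in (1000.0, 1100.0, 900.0):
    y = current_yield(ANNUAL_PAYMENT, price)
    print(f"price ${price:,.0f} -> yield {y:.1%}")
```

The payment never changes; only the price does, and the yield moves in the opposite direction.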

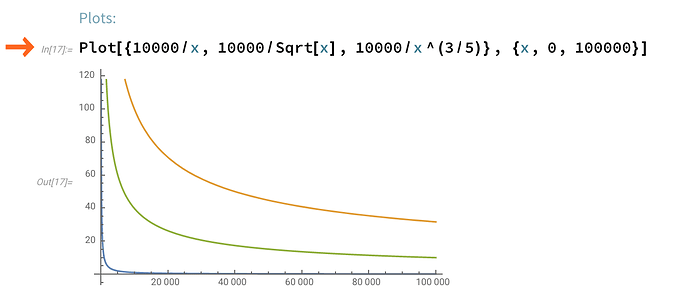

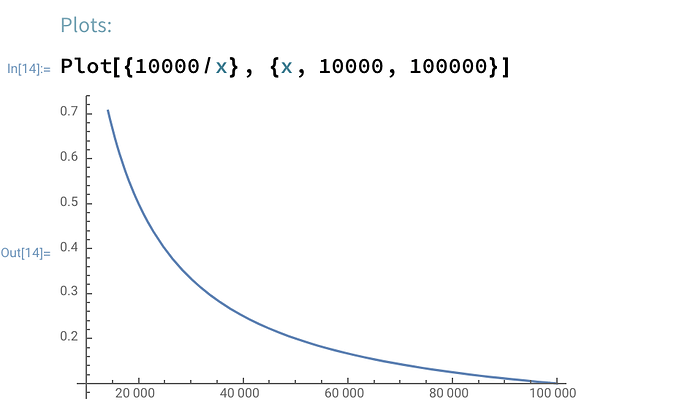

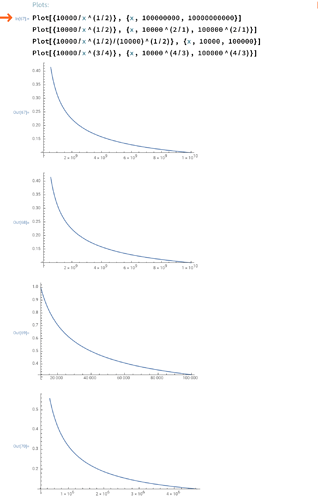

Therefore, a fixed income asset is an ideal instrument to assess the perceived market risk of a given game. For an early version of Casper, you might imagine setting $Y(1, 0, TD) = \frac{\text{Fixed Income}}{TD}$, where $TD$ is the total deposits, to test the yield (with a hypothesis, of course). A given fixed income will attract a commensurate amount of $TD$, reflecting the “risk” associated with the mechanism.
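To make the relationship concrete, here is a minimal sketch that inverts the yield formula: given a fixed income and a required return, it computes the TD at which the yield has been competed down to that return. The function names and the 100,000 ETH reward are hypothetical and chosen only for illustration; the 5%, 15% and 50% returns are the ones mentioned earlier.

```python
def validator_yield(fixed_income: float, total_deposits: float) -> float:
    """Yield = fixed income / total deposits (TD)."""
    if total_deposits <= 0:
        raise ValueError("total_deposits must be positive")
    return fixed_income / total_deposits


def equilibrium_deposits(fixed_income: float, required_return: float) -> float:
    """TD at which the yield equals the required return."""
    if required_return <= 0:
        raise ValueError("required_return must be positive")
    return fixed_income / required_return


FIXED_INCOME_ETH = 100_000.0  # hypothetical annual reward, not from the post

for required_return in (0.05, 0.15, 0.50):
    td = equilibrium_deposits(FIXED_INCOME_ETH, required_return)
    print(f"required return {required_return:.0%} -> TD of about {td:,.0f} ETH")
```

Read in the other direction, observing the TD that a known fixed income attracts gives an estimate of the return the market requires.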

2. Incentivizing a Total Deposit Level

Once there’s a strong hypothesis or empirical evidence of the mechanism’s required return, the mechanism designers can incentivize a desired total deposit level for the network.

So, let’s say the desired total deposit level is $100M and the previously observed annualized validator yield is 20%. The architect may then target a $20M annual sum of rewards. That way, if the deposit level is lower (say $80M), the excess return to validators (25% rather than 20%) will attract more deposits seeking to capture that excess yield, which drives total deposits toward the desired level.
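A short sketch of this targeting logic, using the numbers from the example. The function names are illustrative, and the $125M case is an added data point showing what happens when deposits overshoot.

```python
def target_annual_reward(target_deposits: float, required_yield: float) -> float:
    """Fixed reward that pays exactly the required yield at the target TD."""
    return target_deposits * required_yield


def realized_yield(annual_reward: float, total_deposits: float) -> float:
    """Yield validators actually earn at a given deposit level."""
    return annual_reward / total_deposits


REQUIRED_YIELD = 0.20
reward = target_annual_reward(100e6, REQUIRED_YIELD)  # $20M

for deposits in (80e6, 100e6, 125e6):
    y = realized_yield(reward, deposits)
    excess = y - REQUIRED_YIELD
    print(f"TD ${deposits / 1e6:.0f}M -> yield {y:.1%} (excess {excess:+.1%})")
```

Below the target the excess yield is positive, which should pull deposits in; above it the excess is negative, which should push them out.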

[If bootstrapping the network, we can set a progressive reward increase with an asymptote at the targeted reward level (i.e. $20M in the example above) so that the initial rewards aren’t excessively high: a $20M reward on $1M of deposits would return 20x, which would be too much. The mirroring mechanism here could be a target max return (“size of the carrot”). For example, we could have a max incentive yield of 50%, so that every time the market approaches a 20% yield with TD < desired level, we boost the yield back up to 50% (ideally with a smoother version of this).]
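The bootstrapping idea is not specified precisely, so the following is only one possible reading, not a proposed schedule. It sketches two hypothetical reward functions of TD: a hard-capped one that pays the 50% max yield until the reward reaches $20M, and a smooth exponential one that approaches $20M asymptotically while never exceeding the 50% yield. Both function shapes are assumptions introduced here for illustration.

```python
import math

TARGET_REWARD = 20e6  # asymptotic annual reward from the example ($)
MAX_YIELD = 0.50      # "size of the carrot": cap on the yield offered


def capped_reward(total_deposits: float) -> float:
    """Pay MAX_YIELD on deposits until the reward reaches TARGET_REWARD."""
    return min(TARGET_REWARD, MAX_YIELD * total_deposits)


def smooth_reward(total_deposits: float) -> float:
    """Smooth variant: rises toward TARGET_REWARD, yield stays below MAX_YIELD."""
    x = MAX_YIELD * total_deposits / TARGET_REWARD
    return TARGET_REWARD * (1.0 - math.exp(-x))


for deposits in (1e6, 40e6, 80e6, 100e6):
    capped = capped_reward(deposits)
    smooth = smooth_reward(deposits)
    print(
        f"TD ${deposits / 1e6:.0f}M: "
        f"capped yield {capped / deposits:.1%}, "
        f"smooth yield {smooth / deposits:.1%}"
    )
```

With $1M of deposits, both versions pay out roughly 50% rather than 20x. Note that the smooth variant pays somewhat less than 20% at $100M of deposits, so its parameters would need tuning for the equilibrium to land on the desired level.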

3. Total Deposits vs Market Cap

The total deposit level that will drive the fixed income level shouldn’t be thought of in dollar amounts but, more precisely, as a % of market cap, since that is the value the validators are ultimately trying to protect. So depending on the security constraints and design decisions, one may have, for example, anywhere between 1% and 25% as a target for TD/MarketCap.

Let’s say that target is 1% during an early hybrid implementation such as the first FFG implementation. Then, at a $30B market cap, that would be about $300M in deposits (~1M ETH). At a 20% yield level, that would mean $60M (200K ETH) awarded annually via issuance (a fixed level, implying ~0.2% incremental issuance on top of current levels).
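The numbers in this example can be reproduced as follows. The ETH price of $300 is not stated explicitly; it is the price implied by "$300M in deposits (~1M ETH)", and the resulting supply of about 100M ETH is likewise an implied assumption.

```python
MARKET_CAP_USD = 30e9
ETH_PRICE_USD = 300.0        # assumption: implied by $300M being ~1M ETH
TARGET_DEPOSIT_RATIO = 0.01  # TD / MarketCap
VALIDATOR_YIELD = 0.20

eth_supply = MARKET_CAP_USD / ETH_PRICE_USD           # ~100M ETH
deposits_usd = TARGET_DEPOSIT_RATIO * MARKET_CAP_USD  # ~$300M
deposits_eth = deposits_usd / ETH_PRICE_USD           # ~1M ETH
reward_usd = VALIDATOR_YIELD * deposits_usd           # ~$60M per year
reward_eth = reward_usd / ETH_PRICE_USD               # ~200K ETH per year
issuance_rate = reward_eth / eth_supply               # ~0.2% of supply per year

print(f"deposits: ${deposits_usd / 1e6:.0f}M ({deposits_eth / 1e6:.1f}M ETH)")
print(f"annual reward: ${reward_usd / 1e6:.0f}M ({reward_eth / 1e3:.0f}K ETH)")
print(f"incremental issuance: {issuance_rate:.2%}")
```

The incremental issuance rate is simply the deposit ratio times the yield (1% × 20% = 0.2%), so it does not depend on the assumed ETH price.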

Lastly, a change in the market cap (i.e. the price of ETH) affects the required return discussed in part 1, because the validator’s required return is more precisely the sum of ETH appreciation and validation yield (full circle!). In sum, the required return of validators, total deposits and market cap are all dynamically interrelated.
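As a rough illustration of this feedback loop, the sketch below treats the required return as a simple sum of expected ETH appreciation and validation yield, then shows how the deposit level supported by a fixed reward shifts with expected appreciation. The 20% required total return and the appreciation scenarios are hypothetical, and the additive split is a simplification.

```python
def required_validation_yield(
    required_total_return: float, expected_eth_appreciation: float
) -> float:
    """Validation yield needed once expected ETH appreciation is accounted for."""
    return required_total_return - expected_eth_appreciation


ANNUAL_REWARD = 20e6          # fixed reward from the earlier example ($)
REQUIRED_TOTAL_RETURN = 0.20  # hypothetical

for appreciation in (-0.10, 0.0, 0.10):
    y = required_validation_yield(REQUIRED_TOTAL_RETURN, appreciation)
    deposits = ANNUAL_REWARD / y  # TD at which the fixed reward pays exactly y
    print(
        f"expected appreciation {appreciation:+.0%} -> "
        f"validation yield {y:.0%}, TD of about ${deposits / 1e6:.0f}M"
    )
```

Under these assumptions, higher expected appreciation lowers the validation yield validators need, so the same fixed reward supports a larger deposit base.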

Summary

This relationship between required returns, total deposits and market capitalization will be instrumental in understanding the “monetary policy” levers available to the mechanism designers.

Also related to this, we will be posting some thoughts on CAPM, standard deviation of validator returns, soft/draconian slashing and required returns.
