Co-authored by Lin Oshitani, Conor McMenamin, Anshu Jalan, and Ahmad Bitar, all from Nethermind. Thanks to Brecht Davos, Jeff Walsh, and Daniel Wang from Taiko for their feedback. Feedback is not necessarily an endorsement.

Summary

Rollups can import the L1 state root into L2 to facilitate message passing between the two layers, such as user deposits and cross-chain contract calls. However, because the EVM cannot access the state root of the current block, rollups can only pull in state roots from past blocks. This restriction makes it impossible for rollups to process L1→L2 messages within the same slot using the state root alone.

To overcome this limitation, this post introduces a protocol that enables the L2 proposer to selectively inject L1 messages emitted in the same slot directly into L2, bypassing the need to wait for a state root import. By combining this protocol with the L2→L1 withdrawal mechanism discussed in our previous post, users can execute composable L1<>L2 bundles, such as depositing ETH from L1, swapping it for USDC on L2, and withdrawing back to L1—all within a single slot.

Terminology

We use the terminology from the Taiko protocol, on which this research is based.

- Messages (a.k.a. Signals): Units of data exchanged between chains to facilitate interoperability, such as transferring tokens or executing cross-chain contract calls. Messages are emitted on the source chain and consumed on the destination chain.

- Same-Slot Messages: Messages emitted in the same slot as the L2 block proposal.

- Message Service Contract: A contract deployed on both L1 and L2 to facilitate message passing between the two layers. Users emit messages in the L1 message service contract and consume them in the L2 message service contract (and vice versa).

- Anchor Block: The historical L1 block referenced by an L2 block to import the L1 state root into the L2 environment, effectively “anchoring” the L2 execution to a specific L1 state root. The anchor block must be at least one slot in the past, as the EVM does not have access to the current block header.
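The anchor constraint can be made concrete with a minimal Python sketch. It assumes the batch inbox authenticates the anchor block with the BLOCKHASH opcode, which returns a non-zero hash only for the 256 most recent complete blocks and never for the current block; `is_valid_anchor` and `BLOCKHASH_WINDOW` are illustrative names, not part of Taiko's contracts.

```python
# Hypothetical helper: which anchor block IDs can a batch proposal included in
# L1 block `current_block` authenticate via BLOCKHASH?
BLOCKHASH_WINDOW = 256  # BLOCKHASH only covers the 256 most recent complete blocks


def is_valid_anchor(anchor_block_id: int, current_block: int) -> bool:
    """Return True if BLOCKHASH(anchor_block_id) is available inside current_block."""
    # The EVM cannot see the current block's own hash, so the anchor
    # must be at least one block in the past.
    if anchor_block_id >= current_block:
        return False
    # Blocks older than the window make BLOCKHASH return zero.
    return current_block - anchor_block_id <= BLOCKHASH_WINDOW


if __name__ == "__main__":
    print(is_valid_anchor(999, 1000))   # True: previous block
    print(is_valid_anchor(1000, 1000))  # False: current block
    print(is_valid_anchor(743, 1000))   # False: 257 blocks back
```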

The Protocol

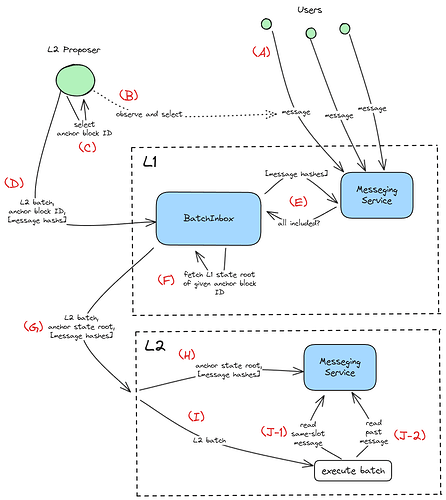

Below is a high-level diagram of the protocol:

The flow is explained below. The steps that handle same-slot messages are the components newly introduced by this proposal to enable same-slot message passing; the remaining steps follow what is implemented in the current Taiko protocol.

- (A) Users invoke the L1 message service contract to initiate the L1→L2 message transfer.

- The messages are hashed and stored in the L1 message service contract.

- The L2 proposer:

  - (B) Selects which same-slot messages to import into L2.

  - (C) Selects the anchor block ID, which is the L1 block ID of the anchor block. This determines which historical L1 block’s state root will be imported into L2.

  - (D) Submits the selected same-slot message hashes, the L1 anchor block ID, and the L2 batch to the batch inbox contract.

- The batch inbox contract:

  - (E) Verifies with the L1 message service that the message hashes have been recorded. If the verification fails, the L2 batch proposal is reverted. The messages

  - (F) Fetches the L1 anchor state root for the given L1 anchor block ID via the BLOCKHASH opcode (more precisely, BLOCKHASH returns the anchor block hash, which authenticates the block header containing the state root). The anchor block must be at least one slot in the past, as the EVM cannot access the current block header.

  - (G) Emits an event containing the message hashes, the L2 batch, and the anchor state root, allowing the L2 execution to access and process them.

- The L2 execution will:

  - (H) Import the message hashes and the L1 anchor state root into the L2 message service contract.

  - (I) Execute the batch. L2 transactions in the batch can:

    - (J-1) Consume the imported same-slot messages by calling the L2 message service contract. No Merkle proof is needed in this case, because the message hashes were imported directly and can be stored in a form that is easy to look up in the L2 message service contract.

    - (J-2) Consume messages emitted in blocks at or before the anchor block ID. Users (or relayers acting on their behalf) do this by submitting the original message and a Merkle proof to the L2 message service contract; the proof is verified against the anchor state root to show that the message was emitted on L1.
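To make the flow concrete, here is a minimal, single-process Python model of steps (A) through (J-1). It is a sketch under loud assumptions: contracts are plain classes, a revert is an exception, `hashlib.sha3_256` stands in for keccak256 (they are not the same function), a dictionary stands in for BLOCKHASH, and the anchor block hash is carried instead of a full header. All class and method names are hypothetical and do not mirror Taiko's actual contracts.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


def msg_hash(payload: bytes) -> bytes:
    # Stand-in hash: sha3_256 is NOT Ethereum's keccak256, but plays its role here.
    return hashlib.sha3_256(payload).digest()


class ProposalReverted(Exception):
    """Models the L1 batch proposal transaction reverting."""


class L1MessageService:
    def __init__(self) -> None:
        self.recorded: Set[bytes] = set()

    def send_message(self, payload: bytes) -> bytes:
        # (A) A user emits a message; only its hash is stored.
        h = msg_hash(payload)
        self.recorded.add(h)
        return h

    def is_recorded(self, h: bytes) -> bool:
        return h in self.recorded


@dataclass
class BatchProposed:
    # (G) Event payload consumed by the L2 execution.
    message_hashes: List[bytes]
    batch: bytes
    anchor_block_hash: bytes


class BatchInbox:
    def __init__(self, service: L1MessageService, block_hashes: Dict[int, bytes]) -> None:
        self.service = service
        self.block_hashes = block_hashes  # stand-in for the BLOCKHASH opcode

    def propose_batch(self, message_hashes: List[bytes], anchor_block_id: int,
                      batch: bytes) -> BatchProposed:
        # (E) Every selected same-slot message must already be recorded on L1.
        for h in message_hashes:
            if not self.service.is_recorded(h):
                raise ProposalReverted("same-slot message not emitted")
        # (F) Authenticate the anchor block through (a stand-in for) BLOCKHASH.
        anchor = self.block_hashes.get(anchor_block_id)
        if anchor is None:
            raise ProposalReverted("anchor block hash unavailable")
        return BatchProposed(list(message_hashes), batch, anchor)


class L2MessageService:
    def __init__(self) -> None:
        self.same_slot: Set[bytes] = set()
        self.anchor_block_hash: Optional[bytes] = None

    def import_event(self, event: BatchProposed) -> None:
        # (H) Import the same-slot message hashes and the anchor.
        self.same_slot.update(event.message_hashes)
        self.anchor_block_hash = event.anchor_block_hash

    def is_same_slot_message(self, payload: bytes) -> bool:
        # (J-1) Plain set lookup: no Merkle proof required.
        return msg_hash(payload) in self.same_slot


# Usage: one deposit emitted and imported within the same slot.
l1 = L1MessageService()
deposit = l1.send_message(b"deposit 1 ETH to alice")
inbox = BatchInbox(l1, {41: b"\xab" * 32})
event = inbox.propose_batch([deposit], anchor_block_id=41, batch=b"l2 txs")
l2 = L2MessageService()
l2.import_event(event)
print(l2.is_same_slot_message(b"deposit 1 ETH to alice"))  # True
```

The (J-2) path, which verifies Merkle proofs against the anchor state root, is omitted for brevity.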

Note that the message service contracts do not provide native replay detection; they delegate this responsibility to the applications that consume the messages.
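Because replay protection lives in the consuming application, each application must remember which messages it has already acted on. The following Python sketch shows one way a hypothetical L2 deposit application could do this; `DepositApp`, the message encoding, and the per-message nonce (which keeps two otherwise identical deposits distinct) are illustrative assumptions, and `sha3_256` again stands in for keccak256.

```python
import hashlib
from typing import Dict, Set


def msg_hash(nonce: int, recipient: str, amount: int) -> bytes:
    # Hypothetical message encoding; sha3_256 stands in for keccak256.
    return hashlib.sha3_256(f"{nonce}:{recipient}:{amount}".encode()).digest()


class ReplayError(Exception):
    pass


class DepositApp:
    """Credits L1 deposits on L2 and rejects replays of the same message."""

    def __init__(self, imported_hashes: Set[bytes]) -> None:
        # Hashes the L2 message service considers valid (imported or proven).
        self.imported_hashes = imported_hashes
        self.consumed: Set[bytes] = set()
        self.balances: Dict[str, int] = {}

    def claim(self, nonce: int, recipient: str, amount: int) -> None:
        h = msg_hash(nonce, recipient, amount)
        if h not in self.imported_hashes:
            raise ValueError("message not known to the L2 message service")
        if h in self.consumed:
            raise ReplayError("message already consumed")
        self.consumed.add(h)  # mark as consumed before crediting
        self.balances[recipient] = self.balances.get(recipient, 0) + amount


app = DepositApp({msg_hash(0, "alice", 5), msg_hash(1, "alice", 5)})
app.claim(0, "alice", 5)
app.claim(1, "alice", 5)  # different nonce, so not a replay
print(app.balances["alice"])  # 10
```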

Next, we will explore the key features of this protocol that shape its design decisions.

Selective Message Imports

An important feature of this protocol is that L2 proposers can choose which same-slot L1→L2 messages to import into their L2 blocks. This ensures:

- State Determinism: Proposers maintain full control over the post-execution L2 state root of their L2 batch by avoiding unexpected state changes due to unanticipated inbound messages from L1. This is especially important for enabling same-slot message passing even when the L2 proposer is not the L1 proposer/builder.

- Cost Management: Proposers can choose to import only same-slot messages that compensate for the additional L1 gas cost required to process them. Additionally, block proposals will have no gas cost overhead when no same-slot messages are imported.
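As an illustration of the cost-management point, the Python sketch below decides which pending same-slot messages to import. It assumes each message carries a hypothetical fee payable to the proposer and uses the rough gas figures from the Appendix (2612 gas per message plus 2600 gas shared per proposal). Because the per-message cost is uniform and the shared cost is paid at most once, importing every individually profitable message, or none at all, maximizes the proposer's surplus under these assumptions.

```python
from dataclasses import dataclass
from typing import List

PER_MESSAGE_GAS = 32 * 16 + 2100  # hash calldata + cold SLOAD (Appendix estimate)
SHARED_CALL_GAS = 2600            # one inbox -> message service call per proposal


@dataclass(frozen=True)
class PendingMessage:
    message_hash: bytes
    fee_wei: int  # hypothetical fee the proposer earns for importing it


def select_messages(pending: List[PendingMessage], base_fee_wei: int) -> List[PendingMessage]:
    per_message_cost = PER_MESSAGE_GAS * base_fee_wei
    # Keep only messages that pay more than their own marginal L1 cost.
    profitable = [m for m in pending if m.fee_wei > per_message_cost]
    surplus = sum(m.fee_wei - per_message_cost for m in profitable)
    # The shared call is paid only if something is imported; if the combined
    # surplus cannot cover it, importing nothing keeps the proposal overhead-free.
    if surplus < SHARED_CALL_GAS * base_fee_wei:
        return []
    return profitable


gwei = 10**9
rich = PendingMessage(b"\x01" * 32, fee_wei=100_000 * gwei)
cheap = PendingMessage(b"\x02" * 32, fee_wei=10_000 * gwei)
print(select_messages([rich, cheap], base_fee_wei=10 * gwei) == [rich])  # True
```

Since the selected set is fixed before submission, the proposer also knows exactly which inbound messages its batch will see, which is the state-determinism property described above.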

Conditioning

Furthermore, the batch proposal transaction reverts if any of the specified same-slot messages were not emitted on L1 (see (E) in the protocol description). This lets the L2 proposer trustlessly condition their L2 batch on the same-slot messages it depends on.

Tying a Loop: L1→L2→L1

Suppose an L1 user wants to deposit ETH from L1, swap it into USDC on L2, and withdraw back to L1—all within the same slot. This can be achieved by combining this proposal with a same-slot L2→L1 withdrawal mechanism we introduced in Fast (and Slow) L2→L1 Withdrawals. Specifically, the L2 proposer can submit an L1 bundle containing the following three transactions:

- An L1 transaction by the user that emits the message for depositing ETH into the L2.

- An L2 batch proposal transaction by the L2 proposer that imports the above deposit message and includes:

  - The DEX trade by the user that swaps the ETH into USDC.

  - The withdrawal transaction by the user that sends the USDC back to L1.

- An L1 solution transaction by a solver that fulfills the withdrawal on L1.

Are Shared Sequencers/Builders Needed Here?

It’s important to note that the L2 proposer does not have to be the L1 builder in the above L1→L2→L1 scenario. That is, a shared sequencer/builder is not needed. What matters is that the bundle is executed atomically: either all transactions in the bundle succeed, or the bundle is not included at all. This atomicity can be achieved through builder RPCs like eth_sendBundle or via EIP-7702 (once implemented), ensuring that the L1→L2→L1 bundle executes atomically within the same slot. Because the bundle is atomic, the L2 proposer does not need to know the exact L1 state in which the L2 batch is executed. Instead, the L2 proposer just needs to know “enough” L1 state (specifically, the imported same-slot messages) to execute the L2 batch.
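The atomicity requirement can be modeled in a few lines of Python. The sketch below applies a bundle to a copy of a toy L1 state and commits only if no transaction reverts, mirroring the all-or-nothing inclusion that builder bundle RPCs such as eth_sendBundle aim to provide. The state layout, the transaction functions, and the 3000 USDC payout are hypothetical placeholders for the three transactions in the L1→L2→L1 example.

```python
import copy
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Tx = Callable[[State], None]


class Revert(Exception):
    pass


def apply_bundle(state: State, bundle: List[Tx]) -> bool:
    """All-or-nothing: run every tx on a copy and commit only if none reverts."""
    trial = copy.deepcopy(state)
    try:
        for tx in bundle:
            tx(trial)
    except Revert:
        return False  # bundle not included; `state` is untouched
    state.clear()
    state.update(trial)
    return True


def user_deposit(state: State) -> None:
    # L1 tx 1: the user emits the deposit message.
    state["messages"].add("deposit:alice:1ETH")


def propose_batch(state: State) -> None:
    # L1 tx 2: the batch imports the deposit; reverts if it was not emitted (step E).
    if "deposit:alice:1ETH" not in state["messages"]:
        raise Revert("deposit message not emitted")
    state["l2_batches"].append(["swap ETH->USDC", "withdraw USDC"])


def solver_fulfill(state: State) -> None:
    # L1 tx 3: the solver fulfills the withdrawal on L1 (hypothetical amount).
    if not state["l2_batches"]:
        raise Revert("no batch containing the withdrawal")
    state["alice_usdc_l1"] = state.get("alice_usdc_l1", 0) + 3000


ok_state: State = {"messages": set(), "l2_batches": []}
print(apply_bundle(ok_state, [user_deposit, propose_batch, solver_fulfill]))  # True
bad_state: State = {"messages": set(), "l2_batches": []}
# The proposal placed before the deposit reverts, so nothing is included.
print(apply_bundle(bad_state, [propose_batch, user_deposit, solver_fulfill]))  # False
```

The proposer only relies on the deposit message preceding the proposal inside the bundle, not on the rest of the L1 block.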

This means that there isn’t necessarily a need for the rollup to be “based” in the sense of having a shared sequencer between L1 and L2, which is one of the value propositions of based rollups. However, relying on same-slot L1 messages for L2 execution creates a tight coupling between L1 and L2. As a result, the L2 would need to “reorg together” with the L1 during reorganization events. In practice, only based rollups would accept such reorgs.
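The coupling can be illustrated with a Python sketch: each L2 batch that imports same-slot messages depends on the specific L1 block in which it was proposed, so if that block is reorged out, the batch and every batch built on top of it must be discarded. The `L2Batch` record and the `surviving_batches` helper are hypothetical, and block hashes are shortened to strings for readability.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class L2Batch:
    batch_id: int
    l1_block_number: int  # L1 block containing the proposal and its same-slot messages
    l1_block_hash: str    # hash of that L1 block when the batch was built


def surviving_batches(batches: List[L2Batch], canonical_l1: Dict[int, str]) -> List[L2Batch]:
    """Return the prefix of batches whose L1 blocks are still canonical."""
    kept: List[L2Batch] = []
    for batch in sorted(batches, key=lambda b: b.batch_id):
        if canonical_l1.get(batch.l1_block_number) != batch.l1_block_hash:
            # This batch's same-slot messages may no longer exist on L1, and
            # later batches build on its post-state, so they all reorg too.
            break
        kept.append(batch)
    return kept


batches = [L2Batch(1, 100, "0xaa"), L2Batch(2, 101, "0xbb"), L2Batch(3, 102, "0xcc")]
# L1 reorgs from block 101 onward.
reorged = {100: "0xaa", 101: "0xb2", 102: "0xc2"}
print([b.batch_id for b in surviving_batches(batches, reorged)])  # [1]
```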

Future Directions

Incentivization

The current protocol does not introduce mechanisms to incentivize the L2 proposers to include and consume same-slot messages. These incentives can be implemented on the L2 execution side. We can introduce features like L2 transactions conditioned on inclusion in specific L2 blocks or those with a “decaying” priority fee. Note that such a feature will also be important to solve the fair exchange problem for preconfirmations, as both preconfirmations and same-slot message passing aim to incentivize proposers to include transactions early.
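One way to picture a “decaying” priority fee is sketched below in Python, together with an optional condition on the target L2 block. Everything here is a hypothetical design choice for illustration: linear decay per slot of delay, measured from the L1 slot in which the dependent message was emitted, with a floor of zero. The post does not specify a decay schedule.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ConditionedTx:
    max_priority_fee: int                  # fee if included in the message's own slot
    message_slot: int                      # L1 slot in which the dependent message was emitted
    decay_per_slot: int                    # hypothetical linear decay rate
    target_l2_block: Optional[int] = None  # hypothetical "only valid in this L2 block" condition


def effective_priority_fee(tx: ConditionedTx, inclusion_slot: int, l2_block: int) -> Optional[int]:
    """Fee paid to the proposer, or None if the inclusion condition fails."""
    if tx.target_l2_block is not None and l2_block != tx.target_l2_block:
        return None  # transaction is invalid in this L2 block
    delay = max(0, inclusion_slot - tx.message_slot)
    return max(0, tx.max_priority_fee - delay * tx.decay_per_slot)


tx = ConditionedTx(max_priority_fee=100, message_slot=10, decay_per_slot=30)
print([effective_priority_fee(tx, s, l2_block=5) for s in (10, 11, 12, 14)])  # [100, 70, 40, 0]
```

The earlier the proposer includes the transaction, the more it earns, which is the incentive both same-slot message passing and preconfirmations need.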

Towards Arbitrary Reads: Taiko Gwyneth

One limitation of this protocol is that, within the same slot, the L2 can only read the L1 state of the message service contract. Can we support reads of arbitrary L1 state, not just the message service contract state, to enable more seamless composability between L1 and L2?

Gwyneth, an exciting new protocol being developed by the Taiko team, aims to enable such arbitrary same-slot L1 state reads from the L2. An interesting approach under consideration involves introducing a new “introspection precompile” (EIP-7814) that exposes the current transaction trie and opcode counter to the EVM. With this information available, the batch inbox contract can fetch the transaction trie and opcode counter and pass them to the L2 execution. This would enable the L2 to simulate the entire L1 execution up to the batch proposal and compute the mid-slot L1 state root at the exact opcode counter of the batch proposal.

Read more about Gwyneth’s design here.

Appendix: Gas Cost

A rough estimate of the L1 gas cost per same-slot message import is as follows:

- 32 bytes * 16 gas/byte = 512 gas for the message hash in calldata (assuming nonzero bytes).

- 2600 gas for a function call from the inbox to the L1 message service. We can batch-call the message service for all messages to share this cost.

- 1 cold SLOAD = 2100 gas for reading the message hash in the message service.

Hence each message costs 512 + 2100 = 2612 gas, plus the 2600 gas for calling the message service, which is shared among all imported messages. Furthermore, if we allow L2 proposers to clear the storage slots of the messages they consumed via same-slot inclusion, they can receive a gas refund, helping to compensate for the additional gas cost.
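The arithmetic above can be captured in a small Python helper that reproduces the estimate. It ignores zero-byte calldata discounts, any calldata floor pricing, and storage refunds; the constant and function names are our own.

```python
CALLDATA_GAS_PER_NONZERO_BYTE = 16  # EIP-2028
HASH_BYTES = 32
COLD_SLOAD_GAS = 2100               # EIP-2929 cold storage read
COLD_CALL_GAS = 2600                # EIP-2929 cold account access


def import_gas(n_messages: int) -> int:
    """Rough extra L1 gas for importing n same-slot messages in one proposal."""
    if n_messages == 0:
        return 0  # no overhead when nothing is imported
    per_message = HASH_BYTES * CALLDATA_GAS_PER_NONZERO_BYTE + COLD_SLOAD_GAS  # 2612
    return n_messages * per_message + COLD_CALL_GAS  # one shared, batched call


for n in (1, 10):
    print(n, import_gas(n), import_gas(n) / n)
# 1 5212 5212.0
# 10 28720 2872.0
```

The shared call dominates for a single message; with ten messages the amortized cost approaches the 2612-gas per-message floor.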